3. Visualize Synthetic Data

3.1. Learning Objectives

This example demonstrates how to build a simple randomized scene and use various sensors to collect ground truth data. The full example can be run through the Isaac Sim Python environment, and this tutorial examines that script section by section.

After this tutorial, you should have a basic understanding of how to set up simulation scenes entirely through Python.

30-45 min tutorial

3.2. Getting Started

To run the example, use the following command.

    ./python.sh standalone_examples/replicator/visualize_groundtruth.py

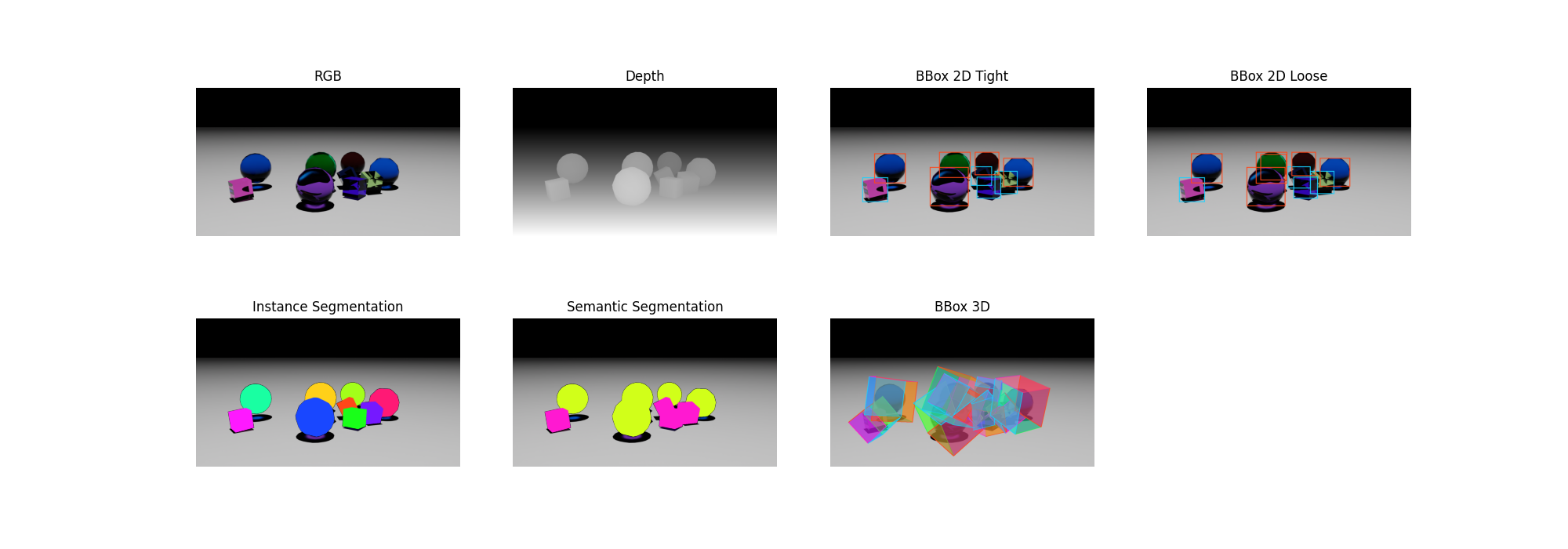

The visualize_groundtruth.py script will generate a number of images in the directory where it is called. They should look something

like the following.

3.3. The Code

3.3.1. Setting the Stage

First, let’s set up the Kit app:

    import copy
    import os
    import omni
    import random
    import numpy as np
    import matplotlib.pyplot as plt
    from omni.isaac.kit import SimulationApp

    TRANSLATION_RANGE = 3.0  # meters
    NUM_PLOTS = 5
    ENABLE_PHYSICS = True
    GLASS_MATERIAL = True

    simulation_app = SimulationApp({"renderer": "RayTracedLighting", "headless": False})

    from omni.isaac.core.objects import DynamicCuboid, DynamicSphere
    from omni.isaac.core.materials import OmniGlass, PreviewSurface
    from omni.isaac.core.utils.viewports import set_camera_view
    from omni.isaac.core.utils.semantics import add_update_semantics
    from omni.isaac.core.utils.stage import is_stage_loading
    from omni.isaac.core import World
    from omni.isaac.synthetic_utils import SyntheticDataHelper
    from omni.syntheticdata import visualize, helpers
    from pxr import Sdf, UsdLux

The simulation_app manages the loading of Kit and all of the extensions used by Isaac Sim. Many extensions have associated Python functionality, so those modules can only be imported after the app is instantiated. Some of these modules define whole classes, like DynamicCuboid or World, while others are simply libraries of utility functions like set_camera_view.

Next, we need to set the stage. We will create a simple scene consisting of a distant light and then we will populate that scene with an assortment of spheres and cubes.

    the_world = World()
    the_world.reset()

    set_camera_view(eye=np.array([7, 7, 2]), target=np.array([0, 0, 0.5]))

    # Add a distant light
    light_prim = UsdLux.DistantLight.Define(the_world.scene.stage, Sdf.Path("/DistantLight"))
    light_prim.CreateIntensityAttr(500)

    if ENABLE_PHYSICS:
        # Create a ground plane
        the_world.scene.add_ground_plane(size=1000, color=np.array([1, 1, 1]))

The Isaac core API simply manages and wraps what USD is already doing under the hood, providing a clean interface to the simulation for scientists, engineers, and makers. A World object holds a Scene of prims, and provides the ability to play, pause, stop, update, and render the simulation of that Scene. Note that it’s still pure USD under the hood, and we can add things like a UsdLux.DistantLight directly if we so choose!

First, we instantiate the_world and call its reset() function, which finalizes the instance by setting up handles needed for things like PhysX. We are working to remove this need for an initial reset call.

Setting up the camera for the scene uses our viewport utility function set_camera_view, which places a camera at eye pointing at target. Presently, the core API only supports a single camera, but additional sensors and camera features will be available in the future.
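To make the eye and target arguments concrete, the following standalone sketch computes the viewing direction and distance implied by the values used in the script. It does not call Isaac Sim; the helper name view_direction is hypothetical and only illustrates the geometry, not how set_camera_view is implemented.

```python
import math


def view_direction(eye, target):
    """Return (unit_direction, distance) from eye to target.

    Hypothetical helper for illustration; not part of the Isaac Sim API.
    """
    delta = [t - e for e, t in zip(eye, target)]
    distance = math.sqrt(sum(d * d for d in delta))
    if distance == 0.0:
        raise ValueError("eye and target must be different points")
    return [d / distance for d in delta], distance


# Same eye and target values as in the tutorial script
direction, distance = view_direction([7, 7, 2], [0, 0, 0.5])
print(direction, distance)
```

With these values the camera sits roughly 10 m from the target and looks slightly downward, since the z component of the direction is negative.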

Finally, we can add the physics ground plane directly through the appropriate scene member function. Note that this adds a physics plane, but it is possible to add any prim to the scene that inherits from XFormPrim (omni.isaac.core.prims.xform_prim), as we will see next.

    prims = []
    path_root = "/World/"
    name_prefix = "prim"
    for i in range(10):
        prim_type = random.choice(["Cube", "Sphere"])
        name = name_prefix + str(i)
        path = path_root + name
        core_prim = DynamicCuboid(path, name) if prim_type == "Cube" else DynamicSphere(path, name)
        prims.append(core_prim)
        translation = np.random.rand(3) * TRANSLATION_RANGE
        color = np.random.rand(3)
        if GLASS_MATERIAL:
            material = OmniGlass(
                path + "/" + name + "_glass", name=name + "_glass", ior=1.25, depth=0.001, thin_walled=False, color=color
            )
        else:
            material = PreviewSurface(path + "/" + name + "_mat", name=name + "_mat", color=color)
        core_prim.set_world_pose(translation)
        core_prim.apply_visual_material(material)
        add_update_semantics(core_prim.prim, prim_type)
        the_world.scene.add(core_prim)

    print("Waiting until all materials are loaded")
    while is_stage_loading():
        simulation_app.app.update()

The core objects module contains classes for common prims that a user might need to populate a simple stage. DynamicCuboid and DynamicSphere are cube and sphere prims with all of the necessary physics-aware schemas pre-applied. The same idea applies to the OmniGlass and PreviewSurface materials; these are USD materials with the core API acting as an interface. We see this with the use of the utility function add_update_semantics, which applies a semantic label to the prim (either “Sphere” or “Cube” in this case). Notice that this utility operates on the underlying USD prim, core_prim.prim! We can modify prim attributes directly through USD or through the member functions of XFormPrim, as we do in this example with set_world_pose and apply_visual_material.

Once we finish setting up the prim, we can add it to the simulation scene. In this example we have saved references to our prims in a list for future access, but because we are using the core API we can also retrieve any prim by its name or path with Scene.get_object.

Finally, we want to make sure we give everything time to load properly, so we check with the is_stage_loading utility function and update the app. Note that we explicitly use simulation_app.app.update() here, which steps the app (Kit) but not the scene.
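The naming and placement logic in the loop can be tried without Isaac Sim. The sketch below reproduces only the bookkeeping (type choice, name, path, random translation) using the standard library. The function plan_prims and its seed parameter are hypothetical additions for reproducibility, and no prims are actually created.

```python
import random

TRANSLATION_RANGE = 3.0  # meters, as in the tutorial script


def plan_prims(count, seed=None, path_root="/World/", name_prefix="prim"):
    """Return a list of dicts describing the prims the loop would create.

    Hypothetical helper: it mirrors the tutorial's naming scheme but does
    not touch USD or the Isaac Sim core API.
    """
    rng = random.Random(seed)
    plans = []
    for i in range(count):
        name = name_prefix + str(i)
        plans.append({
            "type": rng.choice(["Cube", "Sphere"]),
            "name": name,
            "path": path_root + name,
            # Each coordinate is uniform in [0, TRANSLATION_RANGE)
            "translation": [rng.random() * TRANSLATION_RANGE for _ in range(3)],
        })
    return plans


for plan in plan_prims(3, seed=0):
    print(plan["path"], plan["type"], plan["translation"])
```

Passing a fixed seed makes the plan repeatable between runs, which can help when comparing generated images.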

3.3.2. Retrieving Groundtruth Data

Now we’re ready to set up our sensors and collect some data! More information on the sensors can be found here.

    viewport = omni.kit.viewport.get_default_viewport_window()
    sd_helper = SyntheticDataHelper()
    sensor_names = [
        "rgb",
        "depth",
        "boundingBox2DTight",
        "boundingBox2DLoose",
        "instanceSegmentation",
        "semanticSegmentation",
        "boundingBox3D",
        "camera",
        "pose",
    ]

    # Initialize sensors first as it can take several frames before they are ready
    sd_helper.initialize(sensor_names=sensor_names, viewport=viewport)

The sensor_names specify what data needs to be exported from the scene. Most of these are captured from the default viewport camera but some, like pose, are just arrays of data extracted from USD. Once we have the names and the viewport, all we need to do is initialize the sd_helper. When we want to capture data, we will get a dictionary of arrays keyed by the sensor_names from the helper.
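As a rough illustration of working with a dictionary keyed by sensor name, the sketch below checks a result for missing sensors before plotting. The fake_gt dictionary is a hand-made stand-in with placeholder values, not real output from SyntheticDataHelper, and missing_sensors is a hypothetical helper.

```python
def missing_sensors(gt, sensor_names):
    """Return the requested sensor names that are absent from gt."""
    return [name for name in sensor_names if name not in gt]


# Stand-in data: real values would be arrays returned by the helper
fake_gt = {"rgb": [[0, 0, 0]], "depth": [[1.0]]}
requested = ["rgb", "depth", "semanticSegmentation"]

print(missing_sensors(fake_gt, requested))  # ['semanticSegmentation']
```

A check like this is one way to fail early with a clear message instead of hitting a KeyError inside the plotting code.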

3.3.3. Visualization

Here we make use of the synthetic data extension directly through the visualize and helpers modules. These modules contain useful functions for managing and plotting data generated by the synthetic data extension.

    def make_plots(gt, name_suffix=""):
        # GROUNDTRUTH VISUALIZATION
        # Setup a figure
        _, axes = plt.subplots(2, 4, figsize=(20, 7))
        axes = axes.flat
        for ax in axes:
            ax.axis("off")

        # RGB
        axes[0].set_title("RGB")
        for ax in axes[:-1]:
            ax.imshow(gt["rgb"])

        # DEPTH
        axes[1].set_title("Depth")
        depth_data = np.clip(gt["depth"], 0, 255)
        axes[1].imshow(visualize.colorize_depth(depth_data.squeeze()))

        # BBOX2D TIGHT
        axes[2].set_title("BBox 2D Tight")
        rgb_data = copy.deepcopy(gt["rgb"])
        axes[2].imshow(visualize.colorize_bboxes(gt["boundingBox2DTight"], rgb_data))

        # BBOX2D LOOSE
        axes[3].set_title("BBox 2D Loose")
        rgb_data = copy.deepcopy(gt["rgb"])
        axes[3].imshow(visualize.colorize_bboxes(gt["boundingBox2DLoose"], rgb_data))

        # INSTANCE SEGMENTATION
        axes[4].set_title("Instance Segmentation")
        instance_seg = gt["instanceSegmentation"][0]
        instance_rgb = visualize.colorize_segmentation(instance_seg)
        axes[4].imshow(instance_rgb, alpha=1.0)

        # SEMANTIC SEGMENTATION
        axes[5].set_title("Semantic Segmentation")
        semantic_seg = gt["semanticSegmentation"]
        semantic_rgb = visualize.colorize_segmentation(semantic_seg)
        axes[5].imshow(semantic_rgb, alpha=1.0)

        # BBOX 3D
        axes[6].set_title("BBox 3D")
        bbox_3d_data = gt["boundingBox3D"]
        bboxes_3d_corners = bbox_3d_data["corners"]
        projected_corners = helpers.world_to_image(bboxes_3d_corners.reshape(-1, 3), viewport)
        projected_corners = projected_corners.reshape(-1, 8, 3)
        rgb_data = copy.deepcopy(gt["rgb"])
        bboxes3D_rgb = visualize.colorize_bboxes_3d(projected_corners, rgb_data)
        axes[6].imshow(bboxes3D_rgb)

        # Save figure
        print("saving figure to: ", os.getcwd() + "/visualize_groundtruth" + name_suffix + ".png")
        plt.savefig("visualize_groundtruth" + name_suffix + ".png")

        # Display camera parameters
        print("Camera Parameters")
        print("==================")
        print(gt["camera"])

        # Display poses of semantically labelled assets
        print("Object Pose")
        print("============")
        print(gt["pose"])
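To give a feel for what a colorization step does, here is a minimal pure-Python sketch that maps integer segmentation IDs to RGB colors. It is a stand-in written for illustration, not the implementation of visualize.colorize_segmentation. The function name, the background convention (ID 0 drawn black), and the color scheme are all assumptions.

```python
import random


def colorize_ids(id_map, background_id=0):
    """Map a 2D list of integer IDs to a 2D list of (r, g, b) tuples.

    Illustrative only: each ID gets a repeatable pseudo-random color,
    and background_id (assumed to be 0) is drawn black.
    """
    palette = {background_id: (0, 0, 0)}
    colored = []
    for row in id_map:
        colored_row = []
        for seg_id in row:
            if seg_id not in palette:
                rng = random.Random(seg_id)  # seed by ID so colors repeat
                palette[seg_id] = tuple(rng.randrange(256) for _ in range(3))
            colored_row.append(palette[seg_id])
        colored.append(colored_row)
    return colored


segmentation = [
    [0, 1, 1],
    [0, 2, 2],
]
for row in colorize_ids(segmentation):
    print(row)
```

Seeding the color by ID keeps each label's color stable across images, which makes a sequence of saved plots easier to compare.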

We are finally ready to generate some data!

    for i in range(NUM_PLOTS):
        if ENABLE_PHYSICS:
            # start simulation
            the_world.play()
            # Step simulation so that objects fall to rest
            print("simulating physics...")
            for frame in range(60):
                the_world.step(render=False)
            print("done")
            # the_world.pause()
        the_world.render()
        gt = sd_helper.get_groundtruth(sensor_names=sensor_names, viewport=viewport, verify_sensor_init=False)
        make_plots(gt, name_suffix=str(i))
        for core_prim in prims:
            translation = np.random.rand(3) * TRANSLATION_RANGE
            color = np.random.rand(3)
            core_prim.set_world_pose(translation)
            core_prim.get_applied_visual_material().set_color(color)

    # cleanup
    the_world.stop()
    simulation_app.close()

For every data sample we want to make, we simulate physics, capture the data, visualize and save it as an image, and finally randomize the scene for the next sample. Here we can see more of the functionality built into the World class. The member functions play() and pause() correspond to the Play and Pause buttons in the Kit UI, allowing the simulation to be moved forward through time using step. Note that we can call step(render=False) to update the physics simulation in time without the overhead associated with rendering.

After we have run the simulation for 60 frames, we call render() to populate our sensors with our new data. Note that the pause() call is commented out in the listing, so the script does not actually pause physics before rendering. We can then call sd_helper.get_groundtruth to retrieve our data dictionary gt, which we send off to make_plots, which will generate our image and save it as a .png file. Finally, we move our prims to new locations with new colors, and we repeat.

At the end, we stop the simulation and close the app in order to exit Kit gracefully.
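A quick back-of-the-envelope check suggests why 60 physics steps are enough for objects to reach the ground. The sketch below assumes a 1/60 s physics step, standard gravity, and a drag-free drop from the maximum TRANSLATION_RANGE height. None of these values are read from the simulator, and the estimate says nothing about how long objects take to stop bouncing or rolling.

```python
import math

GRAVITY = 9.81            # m/s^2, assumed
PHYSICS_DT = 1.0 / 60     # seconds per step, assumed (not read from the simulator)
TRANSLATION_RANGE = 3.0   # meters, as in the tutorial script


def steps_to_fall(height, dt=PHYSICS_DT, gravity=GRAVITY):
    """Return the number of whole steps needed to free-fall `height` meters."""
    fall_time = math.sqrt(2.0 * height / gravity)
    return math.ceil(fall_time / dt)


print(steps_to_fall(TRANSLATION_RANGE))  # 47
```

Under these assumptions, the highest object lands after about 47 steps, leaving a small margin within the 60 steps the script runs.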

3.4. Summary

In this tutorial we examined the process of setting up a stage, defining sensors, and then querying those sensors for ground truth data relating to the scene.

3.4.1. Next Steps

In the next tutorial, Online Generation, we will import and convert ShapeNet assets to be used in the generation of synthetic data.