3. Using Sensors: LIDAR

3.1. Learning Objectives

This tutorial introduces how to use a LIDAR to sense an environment in Omniverse Isaac Sim. After this tutorial, you will know how to add a LIDAR sensor to a scene, activate it, and use it to detect objects in simulation.

10-15 Minute Tutorial

3.2. Getting Started

Prerequisites

Please review the Essential Tutorial series, especially Hello World, prior to beginning this tutorial.

This tutorial demonstrates how to integrate LIDAR sensors into Omniverse Isaac Sim simulations. It begins by showing how to add LIDARs to an environment using the User Interface. It then details how to use the LIDAR Python API for more advanced control, segmentation, and processing.

The simulated LIDAR works by combining Ray Casting and Physics Collisions to report the distance to detected objects in the environment.
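The pure-Python sketch below gives a rough illustration of the ray-casting half of this idea. It intersects a single ray with an axis-aligned box using the standard slab method and returns the hit distance. This is a toy model, not Isaac Sim's implementation, which casts rays against the physics collision geometry in the scene. The helper name `ray_box_distance` and its `min_range`/`max_range` clipping (borrowed loosely from the LIDAR's range settings) are assumptions for illustration.

```python
import math
from typing import Optional, Sequence


def ray_box_distance(
    origin: Sequence[float],
    direction: Sequence[float],
    box_min: Sequence[float],
    box_max: Sequence[float],
    min_range: float = 0.0,
    max_range: float = math.inf,
) -> Optional[float]:
    """Distance along a unit-length ray to an axis-aligned box, or None on a miss.

    Hypothetical helper using the slab method: intersect the ray with the pair of
    parallel planes on each axis and keep the overlap of the resulting intervals.
    """
    t_near, t_far = -math.inf, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            # Ray parallel to this slab: it misses unless the origin lies between the planes.
            if o < lo or o > hi:
                return None
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return None
    # First hit in front of the sensor: the entry point, or the exit point if the origin is inside.
    t_hit = t_near if t_near >= 0.0 else t_far
    # Reject hits behind the sensor or outside the (assumed) valid range window.
    if t_hit < 0.0 or t_hit < min_range or t_hit > max_range:
        return None
    return t_hit
```

As a usage example, take a box standing in for a Cube of size 100 centered at (200, 0, 0). A ray fired along +X from the origin reports 150, the distance to the near face. A ray at 45° misses the box entirely.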

Let’s begin setting up the scene by creating a PhysicsScene and a LIDAR in the environment:

To create a Physics Scene, go to the top Menu Bar and Click Create > Physics > Physics Scene. There should now be a PhysicsScene prim in the Stage panel on the right.

To create a LIDAR, go to the top Menu Bar and Click Create > Isaac > Sensors > LIDAR > Rotating.

Next, let’s set some of the LIDAR properties for rotation and visualization:

Select the newly created LIDAR prim from the Stage panel.

Once selected, the Property panel to the bottom left will populate with all the available properties of the LIDAR.

Scroll down in the Property panel to the Raw USD Properties section.

Enable the drawLines checkbox to enable line rendering.

Set the revolutions per second to 1 Hz by setting rotationRate to 1.0.

To fire LIDAR rays in all directions at once, set rotationRate to 0.0.

Note

You can update all of the LIDAR parameters on the fly while the stage is running. When the rotation rate is zero or less, the LIDAR prim casts rays in all directions at once, based on its FOV and resolution.
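Simple arithmetic gives a rough estimate of the rotation rate's effect. The sketch below assumes, as a simplification, that a rotating LIDAR turns `rotation_rate × 360` degrees per second and that this sweep is split evenly across frames. The helper name `sweep_per_frame` and the frame rate are hypothetical, and Isaac Sim's actual scheduling may differ. A rate of zero or less returns the full horizontal FOV, as described in the note above.

```python
from typing import Tuple


def sweep_per_frame(rotation_rate_hz: float, horizontal_fov_deg: float,
                    horizontal_resolution_deg: float, frame_rate_hz: float) -> Tuple[float, int]:
    """Return (degrees swept, ray columns fired) in one frame; hypothetical helper.

    Simplifying assumption: the sensor turns rotation_rate_hz * 360 degrees per second,
    spread evenly over frames, and a rate <= 0 fires the whole FOV every frame.
    """
    if rotation_rate_hz <= 0.0:
        degrees = horizontal_fov_deg
    else:
        # Never sweep more than the configured field of view in one frame.
        degrees = min(rotation_rate_hz * 360.0 / frame_rate_hz, horizontal_fov_deg)
    # Assumed: one column of rays per horizontal resolution step.
    columns = round(degrees / horizontal_resolution_deg)
    return degrees, columns
```

Under these assumptions, 1 Hz at 60 frames per second covers 6° per frame, which is 15 columns at 0.4° horizontal resolution. A full revolution therefore takes 60 frames, whereas a rate of 0.0 fires all 900 columns every frame.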

3.3. Set Up Collision Detection

The LIDAR can only detect objects with Collisions Enabled. Let’s add an object for the LIDAR to detect:

Go to the top Menu Bar and Click Create > Mesh > Cube.

Move the Cube to the position (200, 0, 0).

Next, add a Physics Collider to the Cube:

With the Cube selected, go to the Property panel and click the + Add button.

Select + Add > Physics > Collider.

Use the mouse and move the Cube around the scene to see how the LIDAR rays interact with the geometry.
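A toy model shows why the Collider matters. The sketch below casts one ray against a list of objects and skips any object without a collider, so an object that is visible in the viewport can still be invisible to the ray cast. Spheres are used only because their intersection test is short. `SceneObject`, its `has_collider` flag, and both helper functions are hypothetical stand-ins, not Isaac Sim or USD API.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class SceneObject:
    """Hypothetical stand-in for a prim: a sphere that may or may not have a collider."""
    name: str
    center: Sequence[float]
    radius: float
    has_collider: bool


def ray_sphere_distance(origin, direction, center, radius) -> Optional[float]:
    """Distance along a unit-length ray to the first sphere hit in front of the origin, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    # Solve t^2 + 2*b*t + c = 0 for the ray point origin + t * direction on the sphere.
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t >= 0.0:
            return t
    return None


def closest_hit(origin, direction, objects: List[SceneObject]) -> Optional[Tuple[str, float]]:
    """Return (name, distance) of the nearest collider hit, skipping objects without colliders."""
    best = None
    for obj in objects:
        if not obj.has_collider:
            continue  # No collider: the ray passes straight through this object.
        t = ray_sphere_distance(origin, direction, obj.center, obj.radius)
        if t is not None and (best is None or t < best[1]):
            best = (obj.name, t)
    return best
```

An object placed between the sensor and the target is ignored until its `has_collider` flag is set. Once the flag is set, that object becomes the nearest hit.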

3.4. Attach a LIDAR to Geometry

For most use cases, LIDARs will be attached to more complex assemblies, such as cars or robots. Let's learn how to attach a LIDAR to parent geometry. We are going to use a Cylinder as a placeholder for a more complex prim.

Add a Cylinder to the scene and nest the LIDAR prim under it:

Right click in the viewport and select Create > Mesh > Cylinder.

Set the position of the Cylinder to (0, 0, 0).

In the Stage panel, drag and drop the LIDAR prim onto the Cylinder. This makes the Cylinder the parent of the LIDAR. Now when the Cylinder moves, the LIDAR moves with it. Moreover, all information reported by the LIDAR is now relative to the Cylinder.

Add an offset to the LIDAR to position it precisely relative to the Cylinder:

Select the LIDAR prim from the Stage and move it to (50, 50, 0).

Now move the Cylinder around the environment. The LIDAR maintains this relative transform.

Re-select the LIDAR prim and reset its Translate value to its default setting (0, 0, 0).
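Parenting amounts to composing transforms: the LIDAR's world position is its local Translate carried through the Cylinder's world transform. The sketch below handles only a translation plus a rotation about the vertical axis, assuming a Z-up stage. Real USD transforms are full 4×4 matrices that also include scale and arbitrary rotation. The helper name `child_world_position` is hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def child_world_position(parent_translate: Vec3, parent_yaw_deg: float, child_local: Vec3) -> Vec3:
    """World position of a child prim from its parent's translate and yaw (hypothetical helper).

    Assumes a Z-up stage and ignores parent scale, roll, and pitch for brevity.
    """
    yaw = math.radians(parent_yaw_deg)
    lx, ly, lz = child_local
    # Rotate the local offset by the parent's yaw, then add the parent's translation.
    wx = parent_translate[0] + lx * math.cos(yaw) - ly * math.sin(yaw)
    wy = parent_translate[1] + lx * math.sin(yaw) + ly * math.cos(yaw)
    wz = parent_translate[2] + lz
    return (wx, wy, wz)
```

For example, suppose the LIDAR sits at the local offset (50, 50, 0). Moving the Cylinder to (100, 0, 0) and turning it 90° places the LIDAR at (50, 50, 0) in world space. After the Translate is reset to (0, 0, 0), the LIDAR sits exactly at the Cylinder's origin wherever the Cylinder goes.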

3.5. Attach a LIDAR to a Moving Robot

Similarly, you can attach a LIDAR prim to a robot. To keep the moving robot simple, this section uses the Carter URDF import example.

In the Carter URDF import example, press LOAD, CONFIGURE, and MOVE.

Press Play; the Carter robot should drive forward automatically.

To create a LIDAR, go to the top Menu Bar and Click Create > Isaac > Sensors > LIDAR > Rotating. The LIDAR prim will be created as a child of the selected prim.

In the Stage panel, select your LIDAR prim and drag it onto /carter/chassis_link.

Enable draw lines and set the rotation rate to zero for easier debugging.

Move the LIDAR prim vertically so it is located at the correct height relative to the center of the robot.

3.6. Use the Python API

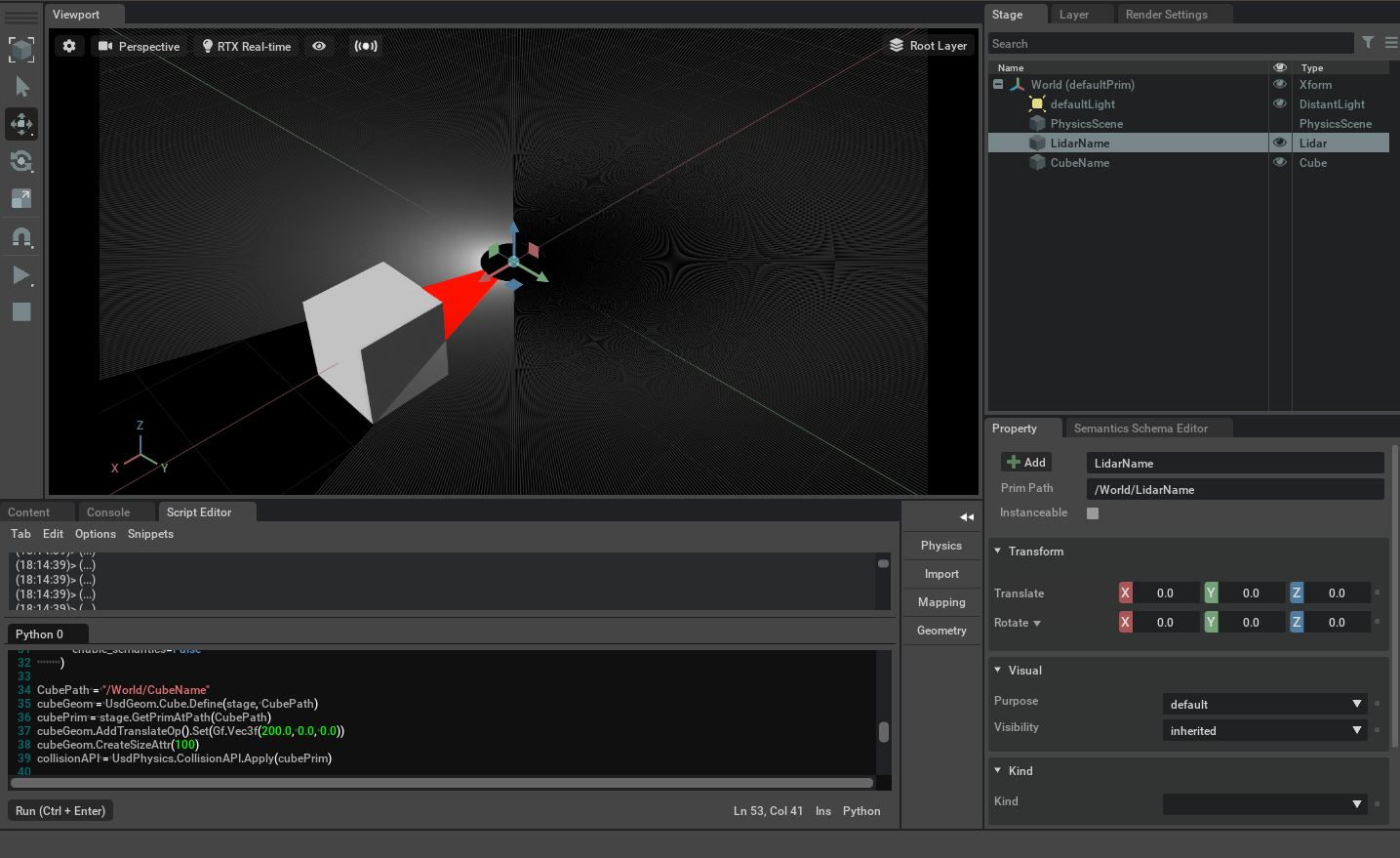

The LIDAR Python API lets you create, control, and query a LIDAR programmatically from scripts and extensions. Let's use the Script Editor and the Python API to retrieve the data from the LIDAR's last sweep:

Go to the top menu bar and Click Window > Script Editor to open the Script Editor Window.

Add the necessary imports:

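```python
import omni  # Provides the core omniverse apis
import omni.kit.app  # App interface, used to wait for the next frame
import omni.kit.commands  # Kit commands, used to create the physics scene and LIDAR
import omni.timeline  # Timeline interface, used to play and pause the simulation
import omni.usd  # USD context, used to access the stage
import asyncio  # Used to run sample asynchronously to not block rendering thread
from omni.isaac.range_sensor import _range_sensor  # Imports the python bindings to interact with lidar sensor
from pxr import UsdGeom, Gf, UsdPhysics  # pxr usd imports used to create cube
```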

Grab the Stage, Simulation Timeline, and LIDAR Interface:

```python
stage = omni.usd.get_context().get_stage()  # Used to access Geometry
timeline = omni.timeline.get_timeline_interface()  # Used to interact with simulation
lidarInterface = _range_sensor.acquire_lidar_sensor_interface()  # Used to interact with the LIDAR

# These commands are the Python-equivalent of the first half of this tutorial
omni.kit.commands.execute('AddPhysicsSceneCommand', stage=stage, path='/World/PhysicsScene')
lidarPath = "/LidarName"
result, prim = omni.kit.commands.execute(
    "RangeSensorCreateLidar",
    path=lidarPath,
    parent="/World",
    min_range=0.4,
    max_range=100.0,
    draw_points=False,
    draw_lines=True,
    horizontal_fov=360.0,
    vertical_fov=30.0,
    horizontal_resolution=0.4,
    vertical_resolution=4.0,
    rotation_rate=0.0,
    high_lod=False,
    yaw_offset=0.0,
    enable_semantics=False
)
```

Create an obstacle for the LIDAR:

```python
CubePath = "/World/CubeName"  # Create a Cube
cubeGeom = UsdGeom.Cube.Define(stage, CubePath)
cubePrim = stage.GetPrimAtPath(CubePath)
cubeGeom.AddTranslateOp().Set(Gf.Vec3f(200.0, 0.0, 0.0))  # Move it away from the LIDAR
cubeGeom.CreateSizeAttr(100)  # Scale it appropriately
collisionAPI = UsdPhysics.CollisionAPI.Apply(cubePrim)  # Add a Physics Collider to it
```

Get the LIDAR data:

The LIDAR needs one frame of simulation before it has data. We therefore start the simulation by calling timeline.play() and wait for a frame to complete. We then pause the simulation with timeline.pause() so the data in the LIDAR's depth buffers stays fixed while we read it.

The simulation runs asynchronously with our script. We therefore wrap the data retrieval in a coroutine and schedule it with asyncio.ensure_future, so it can wait for that frame without blocking the rendering thread.

Calling timeline.pause() is optional; data from the sensor can be read at any time while the simulation is running.

```python
async def get_lidar_param():  # Function to retrieve data from the LIDAR
    await omni.kit.app.get_app().next_update_async()  # wait one frame for data
    timeline.pause()  # Pause the simulation so the data from that frame stays fixed while we read it
    depth = lidarInterface.get_linear_depth_data("/World" + lidarPath)
    zenith = lidarInterface.get_zenith_data("/World" + lidarPath)
    azimuth = lidarInterface.get_azimuth_data("/World" + lidarPath)
    print("depth", depth)  # Print the data
    print("zenith", zenith)
    print("azimuth", azimuth)

timeline.play()  # Start the Simulation
asyncio.ensure_future(get_lidar_param())  # Only ask for data after sweep is complete
```
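The scheduling pattern above can be tried outside Isaac Sim with plain `asyncio`. In the sketch below, `FakeLidar` and `fake_next_update_async` are hypothetical stand-ins for the LIDAR interface and `omni.kit.app.get_app().next_update_async()`. The fake sensor has no data until one frame has been simulated, so the coroutine awaits a frame before reading. `asyncio.ensure_future` only schedules the coroutine, so the calling code continues immediately. Inside Isaac Sim, the application's event loop is assumed to be running already, while this standalone version starts one with `asyncio.run`.

```python
import asyncio
from typing import List, Optional, Tuple


class FakeLidar:
    """Hypothetical stand-in for the LIDAR interface: data appears only after a frame."""

    def __init__(self) -> None:
        self.depth: Optional[List[float]] = None

    def simulate_frame(self) -> None:
        self.depth = [1.5, 1.5, 2.0]  # made-up readings for illustration only


async def fake_next_update_async(lidar: FakeLidar) -> None:
    """Stand-in for waiting one app update: yield to the loop, then 'simulate' a frame."""
    await asyncio.sleep(0)
    lidar.simulate_frame()


async def get_lidar_param(lidar: FakeLidar) -> List[float]:
    await fake_next_update_async(lidar)  # wait one frame so the buffer is filled
    assert lidar.depth is not None
    return lidar.depth


async def main() -> Tuple[bool, List[float]]:
    lidar = FakeLidar()
    # ensure_future schedules the coroutine and returns immediately, as in the tutorial.
    task = asyncio.ensure_future(get_lidar_param(lidar))
    had_data_before = lidar.depth is not None  # the task has not run yet at this point
    depth = await task
    return had_data_before, depth
```

Here `asyncio.run(main())` returns `(False, [1.5, 1.5, 2.0])`. No data exists when the task is scheduled, and the made-up readings appear only after the awaited frame.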

Run the full script:


```python
# provides the core omniverse apis
import omni
import omni.kit.app
import omni.kit.commands
import omni.timeline
import omni.usd
# used to run sample asynchronously to not block rendering thread
import asyncio
# import the python bindings to interact with lidar sensor
from omni.isaac.range_sensor import _range_sensor
# pxr usd imports used to create cube
from pxr import UsdGeom, Gf, UsdPhysics

stage = omni.usd.get_context().get_stage()
lidarInterface = _range_sensor.acquire_lidar_sensor_interface()
timeline = omni.timeline.get_timeline_interface()
omni.kit.commands.execute('AddPhysicsSceneCommand', stage=stage, path='/World/PhysicsScene')

lidarPath = "/LidarName"
result, prim = omni.kit.commands.execute(
    "RangeSensorCreateLidar",
    path=lidarPath,
    parent="/World",
    min_range=0.4,
    max_range=100.0,
    draw_points=False,
    draw_lines=True,
    horizontal_fov=360.0,
    vertical_fov=30.0,
    horizontal_resolution=0.4,
    vertical_resolution=4.0,
    rotation_rate=0.0,
    high_lod=False,
    yaw_offset=0.0,
    enable_semantics=False
)

CubePath = "/World/CubeName"
cubeGeom = UsdGeom.Cube.Define(stage, CubePath)
cubePrim = stage.GetPrimAtPath(CubePath)
cubeGeom.AddTranslateOp().Set(Gf.Vec3f(200.0, 0.0, 0.0))
cubeGeom.CreateSizeAttr(100)
collisionAPI = UsdPhysics.CollisionAPI.Apply(cubePrim)

async def get_lidar_param():
    await omni.kit.app.get_app().next_update_async()
    timeline.pause()
    depth = lidarInterface.get_linear_depth_data("/World" + lidarPath)
    zenith = lidarInterface.get_zenith_data("/World" + lidarPath)
    azimuth = lidarInterface.get_azimuth_data("/World" + lidarPath)
    print("depth", depth)
    print("zenith", zenith)
    print("azimuth", azimuth)

timeline.play()
asyncio.ensure_future(get_lidar_param())
```

And you should see the following:

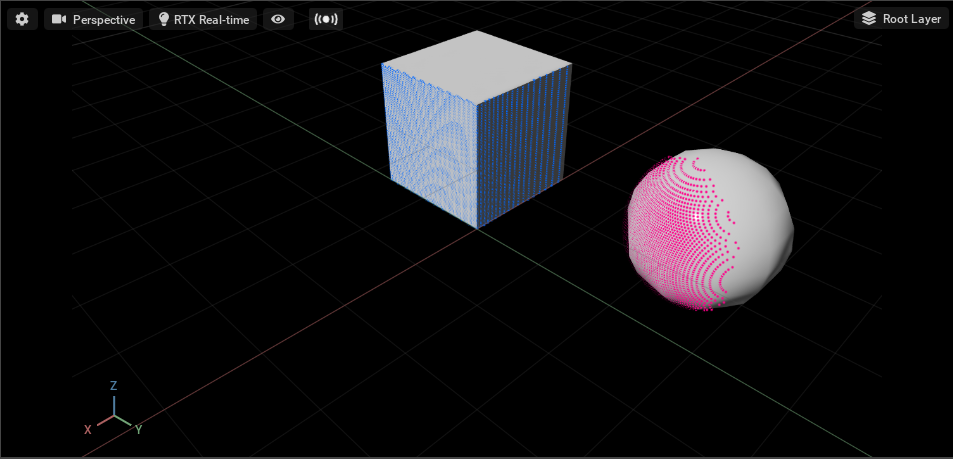
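The depth, zenith, and azimuth values printed by the script describe each return in spherical form, so a Cartesian point can be recovered per ray. The sketch below rests on several assumed conventions. Angles are in radians. Azimuth is measured in the sensor's XY plane from +X toward +Y. Zenith is an elevation above that plane. Check these conventions against the Range Sensor API documentation before relying on the signs. The helper name `spherical_to_points` is hypothetical. It expects one (depth, azimuth, zenith) triple per ray. If the interface returns differently shaped arrays (for example, one azimuth per column and one zenith per row), pair them up first.

```python
import math
from typing import List, Sequence, Tuple


def spherical_to_points(depth: Sequence[float], azimuth: Sequence[float],
                        zenith: Sequence[float]) -> List[Tuple[float, float, float]]:
    """Convert per-ray (range, azimuth, elevation) triples to XYZ in the sensor frame.

    Assumed conventions (verify for your Isaac Sim version): radians, azimuth from +X
    toward +Y, zenith as elevation above the XY plane.
    """
    points = []
    for r, az, el in zip(depth, azimuth, zenith):
        horizontal = r * math.cos(el)  # length of the ray's projection onto the XY plane
        points.append((horizontal * math.cos(az), horizontal * math.sin(az), r * math.sin(el)))
    return points
```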

3.7. Segment a Point Cloud

This code snippet shows how to add semantic labels to the prims in the scene, so that each LIDAR hit point comes with a semantic ID. These IDs can then be used to segment the resulting point cloud.

```python
import omni  # Provides the core omniverse apis
import asyncio  # Used to run sample asynchronously to not block rendering thread
from omni.isaac.range_sensor import _range_sensor  # Imports the python bindings to interact with lidar sensor
from pxr import UsdGeom, Gf, UsdPhysics, Semantics  # pxr usd imports used to create cube and semantic labels

stage = omni.usd.get_context().get_stage()  # Used to access Geometry
timeline = omni.timeline.get_timeline_interface()  # Used to interact with simulation
lidarInterface = _range_sensor.acquire_lidar_sensor_interface()  # Used to interact with the LIDAR

# These commands are the Python-equivalent of the first half of this tutorial
omni.kit.commands.execute('AddPhysicsSceneCommand', stage=stage, path='/World/PhysicsScene')
lidarPath = "/LidarName"
# Create lidar prim
result, prim = omni.kit.commands.execute(
    "RangeSensorCreateLidar",
    path=lidarPath,
    parent="/World",
    min_range=0.4,
    max_range=100.0,
    draw_points=True,
    draw_lines=False,
    horizontal_fov=360.0,
    vertical_fov=60.0,
    horizontal_resolution=0.4,
    vertical_resolution=0.4,
    rotation_rate=0.0,
    high_lod=True,
    yaw_offset=0.0,
    enable_semantics=True
)
UsdGeom.XformCommonAPI(prim).SetTranslate((200.0, 0.0, 0.0))

# Create a cube, sphere, add collision and different semantic labels
primType = ["Cube", "Sphere"]
for i in range(2):
    prim = stage.DefinePrim("/World/" + primType[i], primType[i])
    UsdGeom.XformCommonAPI(prim).SetTranslate((-200.0, -200.0 + i * 400.0, 0.0))
    UsdGeom.XformCommonAPI(prim).SetScale((100, 100, 100))
    collisionAPI = UsdPhysics.CollisionAPI.Apply(prim)

    # Add semantic label
    sem = Semantics.SemanticsAPI.Apply(prim, "Semantics")
    sem.CreateSemanticTypeAttr()
    sem.CreateSemanticDataAttr()
    sem.GetSemanticTypeAttr().Set("class")
    sem.GetSemanticDataAttr().Set(primType[i])

# Get point cloud and semantic id for lidar hit points
async def get_lidar_param():
    await asyncio.sleep(1.0)
    timeline.pause()
    pointcloud = lidarInterface.get_point_cloud_data("/World" + lidarPath)
    semantics = lidarInterface.get_semantic_data("/World" + lidarPath)
    print("Point Cloud", pointcloud)
    print("Semantic ID", semantics)

timeline.play()  # Start the Simulation
asyncio.ensure_future(get_lidar_param())  # Only ask for data after sweep is complete
```

The main differences between this example and the previous one are:

The LIDAR's enable_semantics flag is set to True on creation (line 29).

The Cube and Sphere prims are assigned different semantic labels (lines 41-46).

get_point_cloud_data and get_semantic_data are used to retrieve the point cloud data and semantic IDs (lines 52-53).
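Once the point cloud and semantic IDs have been retrieved, segmenting amounts to grouping points by the ID of the prim each ray hit. The sketch below does this with a dictionary over plain Python lists. It assumes the two arrays are aligned one-to-one, with one semantic ID per point in the same order; confirm this against the API documentation. The function name `segment_by_semantic_id` is hypothetical. The sketch does not show how IDs map back to labels such as "Cube" and "Sphere".

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def segment_by_semantic_id(points: Sequence[Sequence[float]],
                           semantic_ids: Sequence[int]) -> Dict[int, List[Point]]:
    """Group LIDAR hit points by semantic ID (assumes one ID per point, same order)."""
    if len(points) != len(semantic_ids):
        raise ValueError("points and semantic_ids must have the same length")
    segments: Dict[int, List[Point]] = defaultdict(list)
    for point, sem_id in zip(points, semantic_ids):
        segments[int(sem_id)].append(tuple(point))
    return dict(segments)
```

Each resulting group can then be counted, colored, or processed separately, for example to separate hits on the Cube from hits on the Sphere.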

The segmented point cloud from the LIDAR sensor should look like the image below:

3.8. Summary

This tutorial covered the following topics:

Creating and attaching a LIDAR to geometry using the Omniverse Isaac Sim User Interface.

Creating and controlling a LIDAR using the LIDAR Python API.

Point Cloud Segmentation using the LIDAR and Semantics APIs.

3.8.1. Next Steps

See the other sensor tutorials in the Advanced Tutorials section, such as Using Sensors: Generic Range Sensor, to learn how to integrate additional range sensors into Omniverse Isaac Sim.

3.8.2. Further Learning

For a more in-depth look into the concepts covered in this tutorial, please see the following reference materials:

LIDAR

Try the LIDAR Example at Isaac Examples > Sensors > LIDAR.

Range Sensors

See the Range Sensors Manual for full implementation details.

See the Range Sensor API Documentation for additional usage information.