4. Offline Dataset Generation

4.1. Learning Objectives

This tutorial demonstrates how to generate a synthetic dataset offline that can be used to train deep neural networks. The full example runs in the Isaac Sim Python environment. In this tutorial, we examine standalone_examples/replicator/offline_generation.py, focusing on the data writers NumpyWriter and KittiWriter from the omni.isaac.synthetic_utils library.

We will also use torch.utils.data.IterableDataset to iterate through different camera positions in the scene and collect data.
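An IterableDataset relies on Python's iterator protocol: `__iter__` returns the iterator, and each `__next__` call produces one sample. The sketch below is a hypothetical, torch-free illustration of that protocol. `FrameDataset` and its stopping rule are assumptions, not code from offline_generation.py, where the dataset class derives from torch.utils.data.IterableDataset and each `__next__` call renders and captures a frame.

```python
class FrameDataset:
    """Hypothetical, torch-free stand-in for the tutorial's dataset class.

    In offline_generation.py the class derives from
    torch.utils.data.IterableDataset; only the iterator protocol is shown here.
    """

    def __init__(self, num_frames):
        self.num_frames = num_frames
        self.cur_idx = 0  # frame counter, like self.cur_idx in the tutorial

    def __iter__(self):
        return self

    def __next__(self):
        # Assumed stopping rule: end after num_frames samples.
        if self.cur_idx >= self.num_frames:
            raise StopIteration
        frame = {"image_id": str(self.cur_idx)}  # placeholder for captured output
        self.cur_idx += 1
        return frame


for frame in FrameDataset(num_frames=3):
    print(frame["image_id"])
```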

After this tutorial, you should be able to collect and save sensor data from a stage that contains sensors and randomized components.

25-30 min tutorial

4.1.1. Prerequisites

Although this is a standalone tutorial, we recommend completing at least Hello World before starting.

To understand the sensors in the scenario, complete the Recording Synthetic Data tutorial.

4.2. Getting Started

To generate a synthetic dataset offline using one or more cameras, run the following command:

```bash
./python.sh standalone_examples/replicator/offline_generation.py
```

The script accepts several arguments; any that are not specified are set to their default values:

- --scenario: USD stage to load from the Omniverse server for dataset generation.
- --num_frames: Number of frames to record.
- --max_queue_size: Maximum size of the queue that stores and processes synthetic data. If this value is less than or equal to zero, the queue size is unbounded.
- --data_dir: Location where data is written. Default is ./output.
- --writer_mode: Output format, either npy or kitti. Default is npy.
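To make the argument list concrete, here is a hypothetical argparse parser that mirrors the documented options. It is a sketch, not the script's actual parser: the defaults for --num_frames and --max_queue_size are not documented, so the values below are placeholders borrowed from the example command, and the space-separated form of --classes is an assumption. The documented queue behavior matches Python's queue.Queue, which treats a maxsize less than or equal to zero as unbounded.

```python
import argparse
import queue


def build_parser():
    parser = argparse.ArgumentParser(description="Offline dataset generation (sketch)")
    parser.add_argument("--scenario", type=str, default=None,
                        help="USD stage to load from the Omniverse server")
    # Placeholder defaults (not documented); taken from the example command.
    parser.add_argument("--num_frames", type=int, default=10)
    parser.add_argument("--max_queue_size", type=int, default=500)
    parser.add_argument("--data_dir", type=str, default="./output")
    parser.add_argument("--writer_mode", choices=["npy", "kitti"], default="npy")
    # Only meaningful with --writer_mode kitti (assumed space-separated list).
    parser.add_argument("--classes", nargs="+", default=None,
                        help="Classes to label; None means all classes")
    parser.add_argument("--train_size", type=int, default=8)
    return parser


args = build_parser().parse_args(["--max_queue_size", "0"])
# queue.Queue treats maxsize <= 0 as an unbounded queue.
q = queue.Queue(maxsize=args.max_queue_size)
```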

When KittiWriter is selected with the --writer_mode kitti argument, two more arguments become available:

- --classes: Classes to write labels for. Defaults to all classes.
- --train_size: Number of frames in the training set. Defaults to 8.
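The --train_size option divides recorded frames between a training set and the remainder. The sketch below shows one simple way such a split can work; the rule that the first train_size frames go to training is an assumption for illustration, and KittiWriter's actual assignment may differ.

```python
def split_frames(num_frames, train_size):
    """Assign frame indices to 'training' or 'testing'.

    Hypothetical rule: the first `train_size` frames form the training set
    and the rest form the test set. KittiWriter's real behavior may differ.
    """
    train_size = max(0, min(train_size, num_frames))
    return {
        "training": list(range(train_size)),
        "testing": list(range(train_size, num_frames)),
    }


print(split_frames(num_frames=10, train_size=8))
# {'training': [0, 1, 2, 3, 4, 5, 6, 7], 'testing': [8, 9]}
```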

With arguments, the command looks as follows:

```bash
./python.sh standalone_examples/replicator/offline_generation.py \
    --scenario omniverse://localhost/Isaac/Samples/Synthetic_Data/Stage/warehouse_with_sensors.usd \
    --num_frames 10 --max_queue_size 500
```

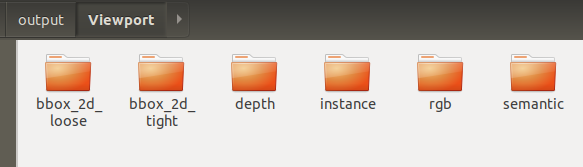

The generated offline dataset is written to data_dir, where the script creates subfolders to organize the output.

4.3. Loading the Environment

The environment is a USD stage that contains sensors. The stage is loaded at startup by the load_stage function. By default, data is collected with a single camera, but stereo capture is also possible by setting both CREATE_NEW_CAMERA and STEREO_CAMERA to True in offline_generation.py.

Load the stage.

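```python
# Excerpt from offline_generation.py (methods of the dataset class).
# `kit` is the application object the script creates at startup;
# CREATE_NEW_CAMERA and STEREO_CAMERA are module-level flags.
import asyncio

import omni.usd


async def load_stage(self, path):
    await omni.usd.get_context().open_stage_async(path)


def _setup_world(self, scenario_path):
    # Load scenario
    setup_task = asyncio.ensure_future(self.load_stage(scenario_path))
    # Keep the app updating until the stage has finished opening
    while not setup_task.done():
        kit.update()
    if CREATE_NEW_CAMERA:
        self._create_camera_rig(stereo=STEREO_CAMERA)
        kit.update()
```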
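_setup_world schedules the asynchronous stage load and then calls kit.update() until the task completes, so the application keeps processing events while the stage opens. The self-contained sketch below reproduces that pattern with plain asyncio. FakeKit is a hypothetical stand-in for kit in which each update() runs one iteration of an event loop, and this load_stage only simulates an asynchronous open.

```python
import asyncio


class FakeKit:
    """Hypothetical stand-in for `kit`: each update() runs one event-loop iteration."""

    def __init__(self):
        self.loop = asyncio.new_event_loop()
        self.updates = 0

    def update(self):
        self.updates += 1
        # Queue stop() behind the callbacks already scheduled, so run_forever()
        # processes one batch of ready callbacks and then returns.
        self.loop.call_soon(self.loop.stop)
        self.loop.run_forever()


async def load_stage(path):
    # Simulated asynchronous open: yield control back to the loop a few times.
    for _ in range(3):
        await asyncio.sleep(0)
    return f"opened {path}"


def setup_world(kit, scenario_path):
    # Same pattern as _setup_world: schedule the coroutine, then keep
    # pumping the app's update loop until the task has finished.
    task = kit.loop.create_task(load_stage(scenario_path))
    while not task.done():
        kit.update()
    return task.result()


kit = FakeKit()
print(setup_world(kit, "warehouse.usd"))  # opened warehouse.usd
kit.loop.close()
```

Driving the loop one step per update keeps control in the caller, which is what lets the real script interleave stage loading with Kit's own frame updates.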

4.4. Saving Sensor Output

Within the __next__ function, we render one frame at a time, get the corresponding synthetic sensor output, and write it to disk.

The __next__ function.

```python
# Excerpt from offline_generation.py. `kit`, STEREO_CAMERA and the writer
# class stored in self.writer_helper (KittiWriter or NumpyWriter) are set up
# elsewhere in the script.
import copy


def __next__(self):
    # Enable/disable sensor output and their format
    sensor_settings_viewport = {
        "rgb": {"enabled": True},
        "depth": {"enabled": True, "colorize": True, "npy": True},
        "instance": {"enabled": True, "colorize": True, "npy": True},
        "semantic": {"enabled": True, "colorize": True, "npy": True},
        "bbox_2d_tight": {"enabled": True, "colorize": True, "npy": True},
        "bbox_2d_loose": {"enabled": True, "colorize": True, "npy": True},
    }
    self._sensor_settings["Viewport"] = copy.deepcopy(sensor_settings_viewport)
    if STEREO_CAMERA:
        self._sensor_settings["Viewport 2"] = copy.deepcopy(sensor_settings_viewport)

    # step once and then wait for materials to load
    self.dr.commands.RandomizeOnceCommand().do()
    kit.update()
    from omni.isaac.core.utils.stage import is_stage_loading

    while is_stage_loading():
        kit.update()

    num_worker_threads = 4

    # Write to disk (the writer is created on the first call only)
    if self.data_writer is None:
        print(f"Writing data to {self.data_dir}")
        if self.writer_mode == "kitti":
            self.data_writer = self.writer_helper(
                self.data_dir,
                num_worker_threads,
                self.max_queue_size,
                self.train_size,
                self.classes,
                bbox_type="BBOX2DLOOSE",
            )
        else:
            self.data_writer = self.writer_helper(
                self.data_dir, num_worker_threads, self.max_queue_size, self._sensor_settings
            )
        self.data_writer.start_threads()

    image = self._capture_viewport("Viewport", self._sensor_settings["Viewport"])
    if STEREO_CAMERA:
        # In stereo mode, the returned image is the one from the second viewport
        image = self._capture_viewport("Viewport 2", self._sensor_settings["Viewport 2"])

    self.cur_idx += 1
    return image
```

The sensor_settings_viewport dictionary at the top of __next__ specifies the synthetic sensors whose output we want to save. To save a sensor's output as a colorized image (.png), set its colorize flag. Similarly, to save the output as a NumPy array (.npy), set its npy flag.
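Each viewport receives its own copy.deepcopy of the settings template. The short sketch below, using a trimmed-down version of the dictionary, shows why a deep copy matters: the per-sensor entries are nested dictionaries, so a shallow copy would share them and a change made for one camera would leak into the other.

```python
import copy

sensor_settings_viewport = {
    "rgb": {"enabled": True},
    "depth": {"enabled": True, "colorize": True, "npy": True},
}

settings = {}
settings["Viewport"] = copy.deepcopy(sensor_settings_viewport)
settings["Viewport 2"] = copy.deepcopy(sensor_settings_viewport)

# Turn off colorized depth for the second camera only.
settings["Viewport 2"]["depth"]["colorize"] = False
print(settings["Viewport"]["depth"]["colorize"])  # True

# A shallow copy shares the nested per-sensor dicts with the template.
shallow = dict(sensor_settings_viewport)
shallow["depth"]["colorize"] = False
print(sensor_settings_viewport["depth"]["colorize"])  # False
```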

If the loaded USD stage has domain randomization (DR) components, the self.dr.commands.RandomizeOnceCommand().do() call randomizes the scene once.

For example, Isaac/Samples/Synthetic_Data/Stage/warehouse_with_sensors.usd contains a DR movement component with a camera attached, so the camera's location is randomized every time RandomizeOnceCommand is executed.
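Conceptually, a movement component draws a new pose each time the scene is randomized. The sketch below illustrates that idea with a uniform sampler inside an axis-aligned box; `sample_camera_position` and the bounds are hypothetical and are not the omni.isaac DR API, which configures its own sampling on the component in the stage.

```python
import random


def sample_camera_position(rng, min_corner, max_corner):
    """Hypothetical uniform sampler for a camera location inside a box.

    Illustrates the idea behind a DR movement component; not the DR API.
    """
    return tuple(rng.uniform(lo, hi) for lo, hi in zip(min_corner, max_corner))


rng = random.Random(0)  # fixed seed so runs are reproducible
bounds = ((-5.0, -5.0, 1.0), (5.0, 5.0, 3.0))  # hypothetical bounds in stage units
for frame in range(3):
    # One sample per frame, analogous to one RandomizeOnceCommand() call
    print(frame, sample_camera_position(rng, *bounds))
```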

Notice also the while is_stage_loading() loop, which keeps updating the application until all materials are loaded, before any synthetic sensor output is collected.
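The while is_stage_loading() loop waits indefinitely. A possible refinement, sketched below as a hypothetical helper rather than anything in offline_generation.py, bounds the wait so that a stage that never finishes loading raises an error instead of hanging the run.

```python
def wait_until(is_done, step, max_steps=1000):
    """Call step() until is_done() returns True; return the number of steps.

    Hypothetical bounded variant of `while is_stage_loading(): kit.update()`.
    Raises TimeoutError after max_steps calls instead of spinning forever.
    """
    steps = 0
    while not is_done():
        if steps >= max_steps:
            raise TimeoutError(f"still loading after {max_steps} updates")
        step()
        steps += 1
    return steps


# Simulated loader that finishes after 5 updates.
state = {"pending": 5}


def fake_update():
    state["pending"] -= 1


print(wait_until(lambda: state["pending"] == 0, fake_update))  # 5
```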

4.5. Writing Output to Disk

The _capture_viewport function.

```python
# Excerpt from offline_generation.py. `kit`, self.sd_helper,
# self.viewport_iface and self.data_writer are set up elsewhere in the script.
import carb


def _capture_viewport(self, viewport_name, sensor_settings):
    print("capturing viewport:", viewport_name)
    viewport = self.viewport_iface.get_viewport_window(self.viewport_iface.get_instance(viewport_name))
    if not viewport:
        carb.log_error("Viewport Not found, cannot capture")
        return
    groundtruth = {
        "METADATA": {
            "image_id": str(self.cur_idx),
            "viewport_name": viewport_name,
            "DEPTH": {},
            "INSTANCE": {},
            "SEMANTIC": {},
            "BBOX2DTIGHT": {},
            "BBOX2DLOOSE": {},
        },
        "DATA": {},
    }

    # Translate the enabled settings into groundtruth sensor names
    gt_list = []
    if sensor_settings["rgb"]["enabled"]:
        gt_list.append("rgb")
    if sensor_settings["depth"]["enabled"]:
        gt_list.append("depthLinear")
    if sensor_settings["bbox_2d_tight"]["enabled"]:
        gt_list.append("boundingBox2DTight")
    if sensor_settings["bbox_2d_loose"]["enabled"]:
        gt_list.append("boundingBox2DLoose")
    if sensor_settings["instance"]["enabled"]:
        gt_list.append("instanceSegmentation")
    if sensor_settings["semantic"]["enabled"]:
        gt_list.append("semanticSegmentation")

    # on the first frame make sure sensors are initialized
    if self.cur_idx == 0:
        self.sd_helper.initialize(sensor_names=gt_list, viewport=viewport)
        kit.update()
        kit.update()

    # Render new frame
    kit.update()

    # Collect Groundtruth
    gt = self.sd_helper.get_groundtruth(gt_list, viewport)

    # RGB
    image = gt["rgb"]
    if sensor_settings["rgb"]["enabled"] and gt["state"]["rgb"]:
        groundtruth["DATA"]["RGB"] = gt["rgb"]

    # Depth
    if sensor_settings["depth"]["enabled"] and gt["state"]["depthLinear"]:
        groundtruth["DATA"]["DEPTH"] = gt["depthLinear"].squeeze()
        groundtruth["METADATA"]["DEPTH"]["COLORIZE"] = sensor_settings["depth"]["colorize"]
        groundtruth["METADATA"]["DEPTH"]["NPY"] = sensor_settings["depth"]["npy"]

    # Instance Segmentation
    if sensor_settings["instance"]["enabled"] and gt["state"]["instanceSegmentation"]:
        instance_data = gt["instanceSegmentation"][0]
        groundtruth["DATA"]["INSTANCE"] = instance_data
        groundtruth["METADATA"]["INSTANCE"]["WIDTH"] = instance_data.shape[1]
        groundtruth["METADATA"]["INSTANCE"]["HEIGHT"] = instance_data.shape[0]
        groundtruth["METADATA"]["INSTANCE"]["COLORIZE"] = sensor_settings["instance"]["colorize"]
        groundtruth["METADATA"]["INSTANCE"]["NPY"] = sensor_settings["instance"]["npy"]

    # Semantic Segmentation
    if sensor_settings["semantic"]["enabled"] and gt["state"]["semanticSegmentation"]:
        semantic_data = gt["semanticSegmentation"]
        semantic_data[semantic_data == 65535] = 0  # deals with invalid semantic id
        groundtruth["DATA"]["SEMANTIC"] = semantic_data
        groundtruth["METADATA"]["SEMANTIC"]["WIDTH"] = semantic_data.shape[1]
        groundtruth["METADATA"]["SEMANTIC"]["HEIGHT"] = semantic_data.shape[0]
        groundtruth["METADATA"]["SEMANTIC"]["COLORIZE"] = sensor_settings["semantic"]["colorize"]
        groundtruth["METADATA"]["SEMANTIC"]["NPY"] = sensor_settings["semantic"]["npy"]

    # 2D Tight BBox
    if sensor_settings["bbox_2d_tight"]["enabled"] and gt["state"]["boundingBox2DTight"]:
        groundtruth["DATA"]["BBOX2DTIGHT"] = gt["boundingBox2DTight"]
        groundtruth["METADATA"]["BBOX2DTIGHT"]["COLORIZE"] = sensor_settings["bbox_2d_tight"]["colorize"]
        groundtruth["METADATA"]["BBOX2DTIGHT"]["NPY"] = sensor_settings["bbox_2d_tight"]["npy"]
        groundtruth["METADATA"]["BBOX2DTIGHT"]["WIDTH"] = image.shape[1]
        groundtruth["METADATA"]["BBOX2DTIGHT"]["HEIGHT"] = image.shape[0]

    # 2D Loose BBox
    if sensor_settings["bbox_2d_loose"]["enabled"] and gt["state"]["boundingBox2DLoose"]:
        groundtruth["DATA"]["BBOX2DLOOSE"] = gt["boundingBox2DLoose"]
        groundtruth["METADATA"]["BBOX2DLOOSE"]["COLORIZE"] = sensor_settings["bbox_2d_loose"]["colorize"]
        groundtruth["METADATA"]["BBOX2DLOOSE"]["NPY"] = sensor_settings["bbox_2d_loose"]["npy"]
        groundtruth["METADATA"]["BBOX2DLOOSE"]["WIDTH"] = image.shape[1]
        groundtruth["METADATA"]["BBOX2DLOOSE"]["HEIGHT"] = image.shape[0]

    # Hand the frame to the data writer's worker threads
    self.data_writer.q.put(groundtruth)

    return image
```
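The chain of if statements that builds gt_list in _capture_viewport maps each settings key to a groundtruth sensor name. As an alternative sketch, not the script's code, the same mapping can live in a lookup table. The sensor names are the ones used in _capture_viewport; unlike the original, a missing settings key is treated as disabled instead of raising KeyError.

```python
# Settings key -> groundtruth sensor name, in the order _capture_viewport checks them.
SENSOR_NAMES = {
    "rgb": "rgb",
    "depth": "depthLinear",
    "bbox_2d_tight": "boundingBox2DTight",
    "bbox_2d_loose": "boundingBox2DLoose",
    "instance": "instanceSegmentation",
    "semantic": "semanticSegmentation",
}


def build_gt_list(sensor_settings):
    """Return the groundtruth sensor names for every enabled sensor."""
    return [
        name
        for key, name in SENSOR_NAMES.items()
        if sensor_settings.get(key, {}).get("enabled", False)
    ]


settings = {
    "rgb": {"enabled": True},
    "depth": {"enabled": True, "colorize": True, "npy": True},
    "semantic": {"enabled": False},
}
print(build_gt_list(settings))  # ['rgb', 'depthLinear']
```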

In the second half of __next__, the data writer is created on the first call and its worker threads are started to gather synthetic sensor output. When KittiWriter is chosen, note that the train_size and classes options are also passed to it.

In _capture_viewport, we get the synthetic sensor output for each enabled sensor and fill in the groundtruth dictionary with additional metadata before pushing it onto the data writer's queue with self.data_writer.q.put(groundtruth). The writer then saves it in data_dir.
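The data writer follows a producer/consumer design: _capture_viewport produces groundtruth dictionaries, and num_worker_threads worker threads consume them from a queue bounded by max_queue_size, which lets disk writes overlap with rendering. The sketch below illustrates that design with the standard library only. MiniWriter, its sentinel-based shutdown, and its JSON output are hypothetical and are not the API of NumpyWriter or KittiWriter, which also save the image and label data.

```python
import json
import os
import queue
import tempfile
import threading


class MiniWriter:
    """Hypothetical queue-based writer; not the NumpyWriter/KittiWriter API."""

    def __init__(self, data_dir, num_worker_threads=4, max_queue_size=500):
        self.data_dir = data_dir
        self.q = queue.Queue(maxsize=max_queue_size)  # <= 0 means unbounded
        self.threads = [threading.Thread(target=self._worker, daemon=True)
                        for _ in range(num_worker_threads)]

    def start_threads(self):
        for t in self.threads:
            t.start()

    def _worker(self):
        while True:
            item = self.q.get()
            try:
                if item is None:  # sentinel: shut this worker down
                    return
                meta = item["METADATA"]
                name = f"{meta['viewport_name']}_{meta['image_id']}.json"
                with open(os.path.join(self.data_dir, name), "w") as f:
                    json.dump(meta, f)  # only metadata is saved in this sketch
            finally:
                self.q.task_done()

    def stop_threads(self):
        for _ in self.threads:
            self.q.put(None)  # one sentinel per worker
        for t in self.threads:
            t.join()


out_dir = tempfile.mkdtemp()
writer = MiniWriter(out_dir, num_worker_threads=2, max_queue_size=8)
writer.start_threads()
for idx in range(5):
    writer.q.put({"METADATA": {"image_id": str(idx), "viewport_name": "Viewport"}, "DATA": {}})
writer.stop_threads()
print(sorted(os.listdir(out_dir)))
```

Because put() blocks when the bounded queue is full, max_queue_size also caps how far capture can run ahead of the writers.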

Note: The generated offline dataset is stored locally, in a separate folder for each camera.

4.6. Summary

This tutorial covered the following topics:

- Use the NumpyWriter and KittiWriter data writers.
- Create a torch.utils.data.IterableDataset class to iterate through different camera positions in the scene and collect data.

4.6.1. Next Steps

One possible use for the generated data is training a model with the Transfer Learning Toolkit (now the TAO Toolkit).

Once the generated synthetic data is in KITTI format, we can use the Transfer Learning Toolkit to train a model. The Transfer Learning Toolkit provides segmentation, classification, and object detection models. In this example, we look at an object detection use case with the DetectNet_v2 model.
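For reference, a KITTI object label file holds one line per object with 15 space-separated fields: type, truncated, occluded, alpha, the 2D box (left, top, right, bottom), 3D dimensions (height, width, length), 3D location (x, y, z), and rotation_y. The sketch below formats a 2D box as such a line. The helper is hypothetical, and filling the unused 3D fields with zeros is an assumption about what a 2D detection pipeline needs, not a description of KittiWriter's exact output.

```python
def kitti_label_line(class_name, bbox):
    """Format one 2D bounding box as a KITTI object-label line (hypothetical helper).

    Fields: type, truncated, occluded, alpha, bbox (left, top, right, bottom),
    dimensions (h, w, l), location (x, y, z), rotation_y. Only type and bbox
    carry data here; the 3D fields are zero placeholders.
    """
    left, top, right, bottom = (float(v) for v in bbox)
    fields = [class_name, 0.0, 0, 0.0, left, top, right, bottom,
              0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
    return " ".join(f"{v:.2f}" if isinstance(v, float) else str(v) for v in fields)


print(kitti_label_line("pallet", (100, 50, 220, 180)))
# pallet 0.00 0 0.00 100.00 50.00 220.00 180.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00
```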

To get started with TAO, follow the setup instructions in the TAO documentation. Then activate the virtual environment and download the Jupyter notebooks as described there.

TAO uses Jupyter notebooks to guide you through the training process. The cv_samples_v1.3.0 folder contains notebooks for multiple models; for this use case, any of the object detection networks can be used. Here we use DetectNet_v2.

The detectnet_v2 folder contains the Jupyter notebook and a specs folder. The TAO documentation describes this sample in detail. TAO is driven by configuration files, which are in the specs folder; modify these specs so that they use the generated synthetic data as the input.

To prepare the data, run the following command:

```
tao detectnet_v2 dataset-convert [-h] -d DATASET_EXPORT_SPEC -o OUTPUT_FILENAME [-f VALIDATION_FOLD]
```

This command is included in the Jupyter notebook together with a sample configuration. Modify the spec file to match the folder structure of your synthetic data.

After conversion, the data is in TFRecord format and ready for training. Again, the training spec file must be updated to point to the synthetic data and to list the classes being detected. Training is then started with:

```
tao detectnet_v2 train [-h] -k <key>
                       -r <result directory>
                       -e <spec_file>
                       [-n <name_string_for_the_model>]
                       [--gpus <num GPUs>]
                       [--gpu_index <comma separate gpu indices>]
                       [--use_amp]
                       [--log_file <log_file>]
```

For questions about the Transfer Learning Toolkit, see the TAO documentation, which covers these steps in more detail.

4.6.2. Further Learning

To learn how to create datasets interactively in Omniverse Isaac Sim, see the Synthetic Data Recorder; you can then visualize them with the Synthetic Data Visualizer.