Annotators Information

Annotators provide ground truth annotations corresponding to the rendered scene. Replicator ships with multiple off-the-shelf annotators, each of which is described below.

Annotator Registry

The annotator registry holds all of the available annotators. To access one, call omni.replicator.core.annotators.get() with the annotator's name.

The current annotators that are available through the registry are:

| LdrColor | PtDirectIllumation | primPaths |
|---|---|---|
| HdrColor | PtGlobalIllumination | SemanticOcclusionPostRender |
| SmoothNormal | PtReflections | bounding_box_2d_tight_fast |
| BumpNormal | PtRefractions | bounding_box_2d_tight |
| AmbientOcclusion | PtSelfIllumination | bounding_box_2d_loose_fast |
| Motion2d | PtBackground | bounding_box_2d_loose |
| DiffuseAlbedo | PtWorldNormal | bounding_box_3d_fast |
| SpecularAlbedo | PtWorldPos | bounding_box_3d |
| Roughness | PtZDepth | semantic_segmentation |
| DirectDiffuse | PtVolumes | InstanceIdSegmentationPostRender |
| DirectSpecular | PtDiffuseFilter | instance_id_segmentation_fast |
| Reflections | PtReflectionFilter | instance_id_segmentation |
| IndirectDiffuse | PtRefractionFilter | InstanceSegmentationPostRender |
| DepthLinearized | PtMultiMatte0 | instance_segmentation_fast |
| EmissionAndForegroundMask | PtMultiMatte1 | instance_segmentation |
| PtMultiMatte2 | CameraParams | |
| PtMultiMatte3 | BackgroundRand | |
| PtMultiMatte4 | skeleton_prims | |
| PtMultiMatte5 | skeleton_data | |
| PtMultiMatte6 | pointcloud | |
| PtMultiMatte7 | DispatchSync | |
| PostProcessDispatch | | |
| PostProcessDispatchUngated | | |

Some annotators support initialization parameters. For example, segmentation annotators accept a colorize parameter that specifies the output format.

omni.replicator.core.annotators.get("semantic_segmentation", init_params={"colorize": True})

To see how annotators are used within a writer, refer to the scripts that implement the basic writer, which covers all standard annotators. Scripts for Replicator explains how to locate them.

Annotator Output

Below is a short description of each annotator's output. For more information, consult the script annotator_registry.py (follow the instructions in Scripts for Replicator to find it).

LdrColor

Annotator Name: LdrColor, (alternative name: rgb)

The LdrColor or rgb annotator produces the low dynamic range output image as an array of type np.uint8 with shape (height, width, 4), where the four channels correspond to R, G, B, A.

Example

import asyncio

import omni.replicator.core as rep

async def test_ldr():
    # Add Default Light
    distance_light = rep.create.light(rotation=(315,0,0), intensity=3000, light_type="distant")

    cone = rep.create.cone()
    cam = rep.create.camera(position=(500,500,500), look_at=cone)
    rp = rep.create.render_product(cam, (1024, 512))

    # Fetch the annotator from the registry and attach it to the render product
    ldr = rep.AnnotatorRegistry.get_annotator("LdrColor")
    ldr.attach(rp)

    await rep.orchestrator.step_async()
    data = ldr.get_data()
    print(data.shape, data.dtype)  # (512, 1024, 4) uint8

asyncio.ensure_future(test_ldr())

Normals

Annotator Name: normals

The normals annotator produces an array of type np.float32 with shape (height, width, 4).

The first three channels correspond to (x, y, z). The fourth channel is unused.
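As an illustration of how the four-channel output might be consumed, the sketch below drops the unused fourth channel and maps the (x, y, z) components to an 8-bit image for viewing. It runs on a small synthetic array rather than real annotator output. The helper name normals_to_rgb and the assumption that each component lies in [-1, 1] are ours and are not part of the Replicator API.

```python
import numpy as np

def normals_to_rgb(normals: np.ndarray) -> np.ndarray:
    """Map an (H, W, 4) float32 normals array to an (H, W, 3) uint8 image."""
    xyz = normals[..., :3]            # the fourth channel is unused
    xyz = np.clip(xyz, -1.0, 1.0)     # assumption: components lie in [-1, 1]
    return np.round((xyz + 1.0) * 0.5 * 255.0).astype(np.uint8)

# Synthetic stand-in for normals.get_data(): every pixel faces +z.
fake_normals = np.zeros((512, 1024, 4), dtype=np.float32)
fake_normals[..., 2] = 1.0

rgb = normals_to_rgb(fake_normals)
print(rgb.shape, rgb.dtype)
```

With real data, the same function can be applied directly to the array returned by get_data().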

Example

import asyncio

import omni.replicator.core as rep

async def test_normals():
    # Add Default Light
    distance_light = rep.create.light(rotation=(315,0,0), intensity=3000, light_type="distant")

    cone = rep.create.cone()
    cam = rep.create.camera(position=(500,500,500), look_at=cone)
    rp = rep.create.render_product(cam, (1024, 512))

    # Fetch the annotator from the registry and attach it to the render product
    normals = rep.AnnotatorRegistry.get_annotator("normals")
    normals.attach(rp)

    await rep.orchestrator.step_async()
    data = normals.get_data()
    print(data.shape, data.dtype)  # (512, 1024, 4) float32

asyncio.ensure_future(test_normals())

Bounding Box 2D Loose

Annotator Name: bounding_box_2d_loose

Outputs a “loose” 2d bounding box of each entity with semantics in the camera’s field of view.

Loose bounding boxes bound the entire entity regardless of occlusions.

Output Format

The bounding box annotator returns a dictionary with the bounds and semantic id found under the data key, while other information is under the info key: idToLabels, bboxIds and primPaths.

{
    "data": np.dtype(
        [
            ("semanticId", "<u4"),  # Semantic identifier which can be transformed into a readable label using the `idToLabels` mapping
            ("x_min", "<i4"),  # Minimum bounding box pixel coordinate in x (width) axis in the range [0, width]
            ("y_min", "<i4"),  # Minimum bounding box pixel coordinate in y (height) axis in the range [0, height]
            ("x_max", "<i4"),  # Maximum bounding box pixel coordinate in x (width) axis in the range [0, width]
            ("y_max", "<i4"),  # Maximum bounding box pixel coordinate in y (height) axis in the range [0, height]
            ("occlusionRatio", "<f4"),  # **EXPERIMENTAL** Occlusion ratio, where `0.0` is fully visible and `1.0` is fully occluded. See additional notes below.
        ]
    ),
    "info": {
        "idToLabels": {<semanticId>: <semantic_labels>},  # mapping from integer semantic ID to a comma delimited list of associated semantics
        "bboxIds": [<bbox_id_0>, ..., <bbox_id_n>],  # ID specific to bounding box annotators allowing easy mapping between different bounding box annotators.
        "primPaths": [<prim_path_0>, ... <prim_path_n>],  # prim path tied to each bounding box
    }
}

Note

bounding_box_2d_loose will produce the loose 2d bounding box of any prim in the viewport, whether it is partially or fully occluded.

occlusionRatio can only provide valid values for prims composed of a single mesh. Multi-mesh labelled prims will return a value of -1 indicating that no occlusion value is available.
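To make the output format more concrete, here is a minimal sketch that turns a dictionary shaped like the one above into a list of plain Python records. It works on a hand-built sample, not on live annotator output. The helper name describe_boxes is hypothetical, and the code assumes that idToLabels is keyed by the stringified semantic id and that primPaths is aligned row-for-row with data.

```python
import numpy as np

# Structured dtype as described in the output format above.
BBOX_2D_DTYPE = np.dtype([
    ("semanticId", "<u4"),
    ("x_min", "<i4"), ("y_min", "<i4"),
    ("x_max", "<i4"), ("y_max", "<i4"),
    ("occlusionRatio", "<f4"),
])

def describe_boxes(output: dict) -> list:
    """Turn bounding-box annotator output into one plain dict per box."""
    id_to_labels = output["info"]["idToLabels"]
    prim_paths = output["info"]["primPaths"]
    boxes = []
    for row, path in zip(output["data"], prim_paths):
        occlusion = float(row["occlusionRatio"])
        boxes.append({
            # Assumption: idToLabels is keyed by the stringified semantic id.
            "labels": id_to_labels[str(int(row["semanticId"]))],
            "prim_path": path,
            "width": int(row["x_max"]) - int(row["x_min"]),
            "height": int(row["y_max"]) - int(row["y_min"]),
            # -1 means no occlusion value is available (multi-mesh prims).
            "occlusion": None if occlusion < 0 else occlusion,
        })
    return boxes

# Hand-built sample shaped like the annotator output; values are illustrative.
sample = {
    "data": np.array(
        [(0, 443, 198, 581, 357, 0.0), (1, 245, 92, 375, 220, -1.0)],
        dtype=BBOX_2D_DTYPE,
    ),
    "info": {
        "bboxIds": np.array([0, 1], dtype=np.uint32),
        "idToLabels": {"0": {"prim": "cone"}, "1": {"prim": "sphere"}},
        "primPaths": ["/Replicator/Cone_Xform", "/Replicator/Sphere_Xform"],
    },
}

boxes = describe_boxes(sample)
for box in boxes:
    print(box)
```

Mapping the -1 sentinel to None keeps downstream code from treating it as a real occlusion value.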

Example

import asyncio

import omni.replicator.core as rep
import omni.syntheticdata as sd

async def test_bbox_2d_loose():
    # Add Default Light
    distance_light = rep.create.light(rotation=(315,0,0), intensity=3000, light_type="distant")

    cone = rep.create.cone(semantics=[("prim", "cone")], position=(100, 0, 0))
    sphere = rep.create.sphere(semantics=[("prim", "sphere")], position=(-100, 0, 0))
    # The cube's semantic type ("shape") does not match the filter below
    invalid_type = rep.create.cube(semantics=[("shape", "boxy")], position=(0, 100, 0))

    # Setup semantic filter
    sd.SyntheticData.Get().set_instance_mapping_semantic_filter("prim:*")

    cam = rep.create.camera(position=(500,500,500), look_at=cone)
    rp = rep.create.render_product(cam, (1024, 512))

    bbox_2d_loose = rep.AnnotatorRegistry.get_annotator("bounding_box_2d_loose")
    bbox_2d_loose.attach(rp)

    await rep.orchestrator.step_async()
    data = bbox_2d_loose.get_data()
    print(data)
    # {
    #   'data': array([
    #       (0, 443, 198, 581, 357, 0.0),
    #       (1, 245, 92, 375, 220, 0.3823)],
    #     dtype=[('semanticId', '<u4'),
    #            ('x_min', '<i4'),
    #            ('y_min', '<i4'),
    #            ('x_max', '<i4'),
    #            ('y_max', '<i4'),
    #            ('occlusionRatio', '<f4')]),
    #   'info': {
    #       'bboxIds': array([0, 1], dtype=uint32),
    #       'idToLabels': {'0': {'prim': 'cone'}, '1': {'prim': 'sphere'}},
    #       'primPaths': ['/Replicator/Cone_Xform', '/Replicator/Sphere_Xform']}
    # }

asyncio.ensure_future(test_bbox_2d_loose())

Bounding Box 2D Tight

Annotator Name: bounding_box_2d_tight

Outputs a “tight” 2d bounding box of each entity with semantics in the camera’s viewport.

Tight bounding boxes bound only the visible pixels of entities. Completely occluded entities are omitted.

Output Format

The bounding box annotator returns a dictionary with the bounds and semantic id found under the data key, while other information is under the info key: idToLabels, bboxIds and primPaths.

{
    "data": np.dtype(
        [
            ("semanticId", "<u4"),  # Semantic identifier which can be transformed into a readable label using the `idToLabels` mapping
            ("x_min", "<i4"),  # Minimum bounding box pixel coordinate in x (width) axis in the range [0, width]
            ("y_min", "<i4"),  # Minimum bounding box pixel coordinate in y (height) axis in the range [0, height]
            ("x_max", "<i4"),  # Maximum bounding box pixel coordinate in x (width) axis in the range [0, width]
            ("y_max", "<i4"),  # Maximum bounding box pixel coordinate in y (height) axis in the range [0, height]
            ("occlusionRatio", "<f4"),  # **EXPERIMENTAL** Occlusion ratio, where `0.0` is fully visible and `1.0` is fully occluded. See additional notes below.
        ]
    ),
    "info": {
        "idToLabels": {<semanticId>: <semantic_labels>},  # mapping from integer semantic ID to a comma delimited list of associated semantics
        "bboxIds": [<bbox_id_0>, ..., <bbox_id_n>],  # ID specific to bounding box annotators allowing easy mapping between different bounding box annotators.
        "primPaths": [<prim_path_0>, ... <prim_path_n>],  # prim path tied to each bounding box
    }
}

Note

bounding_box_2d_tight bounds only visible pixels.

occlusionRatio can only provide valid values for prims composed of a single mesh.

Multi-mesh labelled prims will return a value of -1 indicating that no occlusion value is available.
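Because bboxIds is described as an identifier shared across bounding box annotators, one possible use is to pair each tight box with its loose counterpart. The sketch below computes the ratio of tight to loose pixel area per bounding box id on hand-built sample data. The function names are hypothetical, and the code assumes that bboxIds is aligned row-for-row with data.

```python
import numpy as np

BBOX_2D_DTYPE = np.dtype([
    ("semanticId", "<u4"),
    ("x_min", "<i4"), ("y_min", "<i4"),
    ("x_max", "<i4"), ("y_max", "<i4"),
    ("occlusionRatio", "<f4"),
])

def box_area(rows: np.ndarray) -> np.ndarray:
    """Pixel area of each box in a structured bounding-box array."""
    width = (rows["x_max"] - rows["x_min"]).astype(np.int64)
    height = (rows["y_max"] - rows["y_min"]).astype(np.int64)
    return width * height

def tight_to_loose_area_ratio(loose: dict, tight: dict) -> dict:
    """Map bbox id -> tight area / loose area for ids present in both outputs."""
    # Assumption: bboxIds[i] identifies the box stored in data[i].
    loose_area = dict(zip(loose["info"]["bboxIds"].tolist(), box_area(loose["data"]).tolist()))
    tight_area = dict(zip(tight["info"]["bboxIds"].tolist(), box_area(tight["data"]).tolist()))
    return {
        bbox_id: tight_area[bbox_id] / area
        for bbox_id, area in loose_area.items()
        if bbox_id in tight_area and area > 0
    }

# Hand-built samples shaped like the two annotator outputs; values are illustrative.
ids = {"bboxIds": np.array([0, 1], dtype=np.uint32)}
loose_sample = {
    "data": np.array([(0, 443, 198, 581, 357, 0.0), (1, 245, 92, 375, 220, 0.3823)], dtype=BBOX_2D_DTYPE),
    "info": ids,
}
tight_sample = {
    "data": np.array([(0, 443, 198, 581, 357, 0.0), (1, 245, 94, 368, 220, 0.3823)], dtype=BBOX_2D_DTYPE),
    "info": ids,
}

ratios = tight_to_loose_area_ratio(loose_sample, tight_sample)
print(ratios)
```

Fully occluded entities are absent from the tight output, so their ids simply do not appear in the result.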

Example

import asyncio

import omni.replicator.core as rep
import omni.syntheticdata as sd

async def test_bbox_2d_tight():
    # Add Default Light
    distance_light = rep.create.light(rotation=(315,0,0), intensity=3000, light_type="distant")

    cone = rep.create.cone(semantics=[("prim", "cone")], position=(100, 0, 0))
    sphere = rep.create.sphere(semantics=[("prim", "sphere")], position=(-100, 0, 0))
    # The cube's semantic type ("shape") does not match the filter below
    invalid_type = rep.create.cube(semantics=[("shape", "boxy")], position=(0, 100, 0))

    # Setup semantic filter
    sd.SyntheticData.Get().set_instance_mapping_semantic_filter("prim:*")

    cam = rep.create.camera(position=(500,500,500), look_at=cone)
    rp = rep.create.render_product(cam, (1024, 512))

    bbox_2d_tight = rep.AnnotatorRegistry.get_annotator("bounding_box_2d_tight")
    bbox_2d_tight.attach(rp)

    await rep.orchestrator.step_async()
    data = bbox_2d_tight.get_data()
    print(data)
    # {
    #   'data': array([
    #       (0, 443, 198, 581, 357, 0.0),
    #       (1, 245, 94, 368, 220, 0.3823)],
    #     dtype=[('semanticId', '<u4'),
    #            ('x_min', '<i4'),
    #            ('y_min', '<i4'),
    #            ('x_max', '<i4'),
    #            ('y_max', '<i4'),
    #            ('occlusionRatio', '<f4')]),
    #   'info': {
    #       'bboxIds': array([0, 1], dtype=uint32),
    #       'idToLabels': {'0': {'prim': 'cone'}, '1': {'prim': 'sphere'}},
    #       'primPaths': ['/Replicator/Cone_Xform', '/Replicator/Sphere_Xform']}
    # }

asyncio.ensure_future(test_bbox_2d_tight())

Bounding Box 3D

Annotator Name: bounding_box_3d

Outputs the 3D bounding box of each entity with semantics in the camera’s viewport.

Output Format

The bounding box annotator returns a dictionary with the bounds and semantic id found under the data key, while other information is under the info key: idToLabels, bboxIds and primPaths.

{
    "data": np.dtype(
        [
            ("semanticId", "<u4"),  # Semantic identifier which can be transformed into a readable label using the `idToLabels` mapping
            ("x_min", "<f4"),  # Minimum bound in x axis in local reference frame (in world units)
            ("y_min", "<f4"),  # Minimum bound in y axis in local reference frame (in world units)
            ("z_min", "<f4"),  # Minimum bound in z axis in local reference frame (in world units)
            ("x_max", "<f4"),  # Maximum bound in x axis in local reference frame (in world units)
            ("y_max", "<f4"),  # Maximum bound in y axis in local reference frame (in world units)
            ("z_max", "<f4"),  # Maximum bound in z axis in local reference frame (in world units)
            ("transform", "<f4", (4, 4)),  # Local to world transformation matrix (transforms the bounds from local frame to world frame)
            ("occlusionRatio", "<f4"),  # **EXPERIMENTAL** Occlusion ratio, where `0.0` is fully visible and `1.0` is fully occluded. See additional notes below.
        ]
    ),
    "info": {
        "idToLabels": {<semanticId>: <semantic_labels>},  # mapping from integer semantic ID to a comma delimited list of associated semantics
        "bboxIds": [<bbox_id_0>, ..., <bbox_id_n>],  # ID specific to bounding box annotators allowing easy mapping between different bounding box annotators.
        "primPaths": [<prim_path_0>, ... <prim_path_n>],  # prim path tied to each bounding box
    }
}

Note

3D bounding boxes are generated regardless of occlusion.

Bounding box dimensions (<axis>_min, <axis>_max) are expressed in stage units.

The bounding box transform is expressed in the world reference frame.

occlusionRatio can only provide valid values for prims composed of a single mesh.

Multi-mesh labelled prims will return a value of -1 indicating that no occlusion value is available.
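Since the bounds are given in the local frame and the transform maps local to world, the world-space corners of a box can be recovered by transforming the eight local corners. The following is a sketch under the assumption that the matrix uses a row-vector convention (translation stored in the last row), so points are multiplied on the left. The function name is hypothetical and the sample values are illustrative.

```python
import itertools
import numpy as np

def bbox_3d_world_corners(x_min, y_min, z_min, x_max, y_max, z_max, transform):
    """Return the 8 corners of a local-frame box in world space, shape (8, 3)."""
    corners = np.array(
        list(itertools.product((x_min, x_max), (y_min, y_max), (z_min, z_max))),
        dtype=np.float64,
    )
    homogeneous = np.hstack([corners, np.ones((8, 1))])
    # Assumption: row-vector convention, i.e. translation lives in the last row.
    world = homogeneous @ np.asarray(transform, dtype=np.float64)
    return world[:, :3]

# Illustrative transform: identity rotation, translated by +100 along x.
sample_transform = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [100.0, 0.0, 0.0, 1.0],
]
corners = bbox_3d_world_corners(-50, -50, -50, 50, 50, 50, sample_transform)
print(corners.min(axis=0), corners.max(axis=0))
```

If the matrix turned out to follow a column-vector convention instead, the multiplication order and a transpose would need to change accordingly.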

Example

import asyncio

import omni.replicator.core as rep
import omni.syntheticdata as sd

async def test_bbox_3d():
    # Add Default Light
    distance_light = rep.create.light(rotation=(315,0,0), intensity=3000, light_type="distant")

    cone = rep.create.cone(semantics=[("prim", "cone")], position=(100, 0, 0))
    sphere = rep.create.sphere(semantics=[("prim", "sphere")], position=(-100, 0, 0))
    # The cube's semantic type ("shape") does not match the filter below
    invalid_type = rep.create.cube(semantics=[("shape", "boxy")], position=(0, 100, 0))

    # Setup semantic filter
    sd.SyntheticData.Get().set_instance_mapping_semantic_filter("prim:*")

    cam = rep.create.camera(position=(500,500,500), look_at=cone)
    rp = rep.create.render_product(cam, (1024, 512))

    bbox_3d = rep.AnnotatorRegistry.get_annotator("bounding_box_3d")
    bbox_3d.attach(rp)

    await rep.orchestrator.step_async()
    data = bbox_3d.get_data()
    print(data)
    # {
    #   'data': array([
    #       (0, -50., -50., -50., 50., 49.9999, 50., [[ 1., 0., 0., 0.], [ 0., 1., 0., 0.], [ 0., 0., 1., 0.], [ 100., 0., 0., 1.]]),
    #       (1, -50., -50., -50., 50., 50. , 50., [[ 1., 0., 0., 0.], [ 0., 1., 0., 0.], [ 0., 0., 1., 0.], [-100., 0., 0., 1.]])],
    #     dtype=[('semanticId', '<u4'),
    #            ('x_min', '<f4'),
    #            ('y_min', '<f4'),
    #            ('z_min', '<f4'),
    #            ('x_max', '<f4'),
    #            ('y_max', '<f4'),
    #            ('z_max', '<f4'),
    #            ('transform', '<f4', (4, 4))]),
    #   'info': {
    #       'bboxIds': array([0, 1], dtype=uint32),
    #       'idToLabels': {'0': {'prim': 'cone'}, '1': {'prim': 'sphere'}},
    #       'primPaths': ['/Replicator/Cone_Xform_03', '/Replicator/Sphere_Xform_03']}
    # }

asyncio.ensure_future(test_bbox_3d())

Distance to Camera

Annotator Name: distance_to_camera

Outputs a depth map of the distance from objects to the camera position. The distance_to_camera annotator produces a 2D array of type np.float32 with 1 channel.

Data Details

The distance to camera is expressed in meters. For example, if the object is 1000 units from the camera and the meters_per_unit variable of the scene is 0.01, the distance to camera would be 10.

0 in the 2d array represents infinity (which means there is no object in that pixel).
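The two details above can be sketched in a few lines. A scene authored in centimeters has a meters_per_unit of 0.01, so 1000 scene units correspond to 10 meters. Pixels with value 0 can be replaced by infinity before computing statistics so that empty pixels do not look like very close objects. Both helper names are hypothetical and the depth array is synthetic.

```python
import numpy as np

def units_to_meters(distance_in_units: float, meters_per_unit: float) -> float:
    """Convert a distance in scene units to meters."""
    return distance_in_units * meters_per_unit

def mask_empty_pixels(depth: np.ndarray) -> np.ndarray:
    """Return a copy of the depth map where 0 (no object) becomes +inf."""
    out = depth.astype(np.float32, copy=True)
    out[out == 0] = np.inf
    return out

meters = units_to_meters(1000, 0.01)
print(meters)

# Synthetic stand-in for the annotator output: two hit pixels, two empty ones.
fake_depth = np.array([[0.0, 2.5], [10.0, 0.0]], dtype=np.float32)
depth = mask_empty_pixels(fake_depth)
nearest = float(depth[np.isfinite(depth)].min())
print(int(np.isfinite(depth).sum()), nearest)
```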

Distance to Image Plane

Annotator Name: distance_to_image_plane

Outputs a depth map of the distance from objects to the image plane of the camera. The distance_to_image_plane annotator produces a 2D array of type np.float32 with 1 channel.

Data Details

The distance to image plane is expressed in meters. For example, if the object is 1000 units from the image plane of the camera and the meters_per_unit variable of the scene is 0.01, the distance to image plane would be 10.

0 in the 2d array represents infinity (which means there is no object in that pixel).

Semantic Segmentation

Annotator Name: semantic_segmentation

Outputs semantic segmentation of each entity in the camera’s viewport that has semantic labels.

Initialization Parameters

colorize (bool): whether to output colorized or non-colorized semantic segmentation.

Output Format

Semantic segmentation image:

- If colorize is set to true, the image will be a 2D array of type np.uint8 with 4 channels. Different colors represent different semantic labels.
- If colorize is set to false, the image will be a 2D array of type np.uint32 with 1 channel, which is the semantic id of the entities.

ID to labels json file:

- If colorize is set to true, it will be the mapping from color to semantic labels.
- If colorize is set to false, it will be the mapping from semantic id to semantic labels.

Note

The semantic labels of an entity are its own semantic labels plus all the semantic labels it inherits from its parents. Semantic labels of the same type are concatenated, separated by commas.

For example, if an entity has a semantic label of [{“class”: “cube”}] and its parent has [{“class”: “rectangle”}], then the final semantic labels of that entity will be [{“class”: “rectangle, cube”}].
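As a rough illustration of working with the non-colorized output, the sketch below counts pixels per semantic label by combining a uint32 id image with an id-to-labels mapping. The input is synthetic, the helper name pixels_per_label is hypothetical, and the code assumes the mapping is keyed by the stringified semantic id.

```python
import numpy as np

def pixels_per_label(seg: np.ndarray, id_to_labels: dict) -> dict:
    """Count pixels for each semantic label in a non-colorized segmentation image."""
    ids, counts = np.unique(seg, return_counts=True)
    result = {}
    for sem_id, count in zip(ids.tolist(), counts.tolist()):
        # Assumption: the mapping is keyed by the stringified semantic id.
        labels = id_to_labels.get(str(sem_id), {})
        key = "; ".join(f"{k}: {v}" for k, v in sorted(labels.items())) or "<unlabelled>"
        result[key] = result.get(key, 0) + count
    return result

# Synthetic 4x4 image: a 2x2 labelled region (id 1) on a background (id 0).
fake_seg = np.zeros((4, 4), dtype=np.uint32)
fake_seg[1:3, 1:3] = 1
fake_id_to_labels = {
    "0": {"class": "background"},
    "1": {"class": "rectangle, cube"},  # inherited and own labels, concatenated
}

counts = pixels_per_label(fake_seg, fake_id_to_labels)
print(counts)
```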

Instance ID Segmentation

Annotator Name: instance_id_segmentation

Outputs instance id segmentation of each entity in the camera’s viewport. The instance id is unique for each prim in the scene with different paths.

Initialization Parameters

colorize (bool): whether to output colorized or non-colorized instance id segmentation.

Output Format

Instance ID segmentation image:

- If colorize is set to true, the image will be a 2D array of type np.uint8 with 4 channels. Different colors represent different instance ids.
- If colorize is set to false, the image will be a 2D array of type np.uint32 with 1 channel, which is the instance id of the entities.

ID to labels json file:

- If colorize is set to true, it will be the mapping from color to usd prim path of that entity.
- If colorize is set to false, it will be the mapping from instance id to usd prim path of that entity.

Note

Instance ids are assigned such that every leaf prim in the scene receives an instance id, whether or not it has semantic labels.

Instance Segmentation

Annotator Name: instance_segmentation

Outputs instance segmentation of each entity in the camera’s viewport. The main difference between instance id segmentation and instance segmentation is that the instance segmentation annotator goes down the hierarchy to the lowest level prim which has semantic labels, whereas instance id segmentation always goes down to the leaf prim.

Initialization Parameters

colorize (bool): whether to output colorized or non-colorized instance segmentation.

Output Format

Instance segmentation image:

- If colorize is set to true, the image will be a 2D array of type np.uint8 with 4 channels. Different colors represent different semantic instances.
- If colorize is set to false, the image will be a 2D array of type np.uint32 with 1 channel, which is the instance id of the semantic entities.

ID to labels json file:

- If colorize is set to true, it will be the mapping from color to usd prim path of that semantic entity.
- If colorize is set to false, it will be the mapping from instance id to usd prim path of that semantic entity.

ID to semantic json file:

- If colorize is set to true, it will be the mapping from color to semantic labels of that semantic entity.
- If colorize is set to false, it will be the mapping from instance id to semantic labels of that semantic entity.

Note

Two prims with the same semantic labels that live at different USD paths will have different ids. If two prims have no semantic labels but share a parent that has semantic labels, they will be classified as the same instance.
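One common way to consume a non-colorized instance image is to split it into one boolean mask per instance id. The sketch below does this on a tiny synthetic array and derives a pixel bounding box from each mask. The helper names are hypothetical, and treating id 0 as background in the usage lines is an assumption made only for this example.

```python
import numpy as np

def instance_masks(instance_ids: np.ndarray, ignore=()) -> dict:
    """Map each instance id to a boolean (H, W) mask, skipping ids in `ignore`."""
    return {
        int(i): instance_ids == i
        for i in np.unique(instance_ids)
        if int(i) not in ignore
    }

def mask_bbox(mask: np.ndarray) -> tuple:
    """Return (x_min, y_min, x_max, y_max) of a mask, with exclusive maxima."""
    ys, xs = np.nonzero(mask)
    return int(xs.min()), int(ys.min()), int(xs.max()) + 1, int(ys.max()) + 1

# Synthetic 3x3 instance image with two instances (ids 3 and 7).
fake_ids = np.array([[0, 0, 7], [0, 7, 7], [3, 0, 0]], dtype=np.uint32)

# Assumption for this example only: id 0 marks background pixels.
masks = instance_masks(fake_ids, ignore=(0,))
for instance_id, mask in masks.items():
    print(instance_id, int(mask.sum()), mask_bbox(mask))
```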

Point Cloud

Annotator Name: pointcloud

Outputs a 2D array of shape (N, 3) representing the points sampled on the surface of the prims in the viewport, where N is the number of points.

Output Format

The pointcloud annotator returns positions of the points found under the data key.

Additional information is under the info key: pointRgb, pointNormals and pointSemantic.

{
    "data": np.dtype(np.float32),  # position value of each point of shape (N, 3)
    "info": {
        "pointRgb": np.dtype(np.uint8),  # rgb value of each point of shape (N, 4)
        "pointNormals": np.dtype(np.float32),  # normal value of each point of shape (N, 3)
        "pointSemantic": np.dtype(np.uint8),  # semantic ids of each point of shape (N)
    }
}

Data Details

Point positions are in the world space.

Sample resolution is determined by the resolution of the render product.

Note

To get the mapping from semantic id to semantic labels, use the pointcloud annotator together with the semantic segmentation annotator and extract the idToLabels data from the latter.
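As a sketch of how the per-point info arrays can be combined with the positions, the function below selects the points that carry a given semantic id and returns their positions and RGB colors. It runs on synthetic data. The helper name is hypothetical, and the code assumes pointRgb may arrive flattened with four values (RGBA) per point, hence the reshape.

```python
import numpy as np

def points_for_semantic_id(pc_output: dict, semantic_id: int):
    """Return (positions, rgb) of the points labelled with `semantic_id`."""
    points = np.asarray(pc_output["data"]).reshape(-1, 3)
    info = pc_output["info"]
    # Assumption: pointRgb holds 4 values (RGBA) per point, possibly flattened.
    rgba = np.asarray(info["pointRgb"]).reshape(-1, 4)
    keep = np.asarray(info["pointSemantic"]) == semantic_id
    return points[keep], rgba[keep, :3]

# Synthetic stand-in for pointcloud_anno.get_data() with four points.
fake_output = {
    "data": np.arange(12, dtype=np.float32).reshape(4, 3),
    "info": {
        "pointRgb": np.tile(np.array([10, 20, 30, 255], dtype=np.uint8), 4),
        "pointSemantic": np.array([2, 2, 5, 2], dtype=np.uint8),
    },
}

positions, colors = points_for_semantic_id(fake_output, 2)
print(positions.shape, colors.shape)
```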

Example 1

The pointcloud annotator captures the prims seen by the camera and samples points on their surfaces, based on the resolution of the render product attached to the camera.

In addition to the sampled points, it also outputs the rgb, normals and semantic id values associated with the prim to which each point belongs.

Prims without any valid semantic labels are ignored by the pointcloud annotator.

import asyncio

import omni.replicator.core as rep

async def test_pointcloud():
    # Add Default Light
    distance_light = rep.create.light(rotation=(315,0,0), intensity=3000, light_type="distant")

    # Pointcloud only captures prims with valid semantics
    W, H = (1024, 512)
    cube = rep.create.cube(position=(0, 0, 0), semantics=[("class", "cube")])
    camera = rep.create.camera(position=(200., 200., 200.), look_at=cube)
    render_product = rep.create.render_product(camera, (W, H))

    pointcloud_anno = rep.annotators.get("pointcloud")
    pointcloud_anno.attach(render_product)

    await rep.orchestrator.step_async()

    pc_data = pointcloud_anno.get_data()
    print(pc_data)
    # {
    #   'data': array([...], shape=(<num_points>, 3), dtype=float32),
    #   'info': {
    #       'pointNormals': array([...], shape=(<num_points> * 4), dtype=float32),
    #       'pointRgb': array([...], shape=(<num_points> * 4), dtype=uint8),
    #       'pointSemantic': array([...], shape=(<num_points>), dtype=uint8),
    #   }
    # }

asyncio.ensure_future(test_pointcloud())

Example 2

In this example, we demonstrate a scenario where multiple camera captures are taken to produce a more complete pointcloud, utilizing the excellent open3d library to export a coloured ply file.

import os
import asyncio

import omni.replicator.core as rep
import open3d as o3d
import numpy as np

async def test_pointcloud():
    # Pointcloud only captures prims with valid semantics
    cube = rep.create.cube(semantics=[("class", "cube")])
    camera = rep.create.camera()
    render_product = rep.create.render_product(camera, (1024, 512))

    pointcloud_anno = rep.annotators.get("pointcloud")
    pointcloud_anno.attach(render_product)

    # Camera positions to capture the cube
    camera_positions = [(500, 500, 0), (-500, -500, 0), (500, 0, 500), (-500, 0, -500)]

    with rep.trigger.on_frame(num_frames=len(camera_positions)):
        with camera:
            rep.modify.pose(position=rep.distribution.sequence(camera_positions), look_at=cube)  # make the camera look at the cube

    # Accumulate points
    points = []
    points_rgb = []
    for _ in range(len(camera_positions)):
        await rep.orchestrator.step_async()

        pc_data = pointcloud_anno.get_data()
        points.append(pc_data["data"])
        # pointRgb is flattened RGBA; keep only the RGB channels
        points_rgb.append(pc_data["info"]["pointRgb"].reshape(-1, 4)[:, :3])

    # Output pointcloud as .ply file
    ply_out_dir = os.path.join(os.path.dirname(os.path.realpath(__file__)), "out")
    os.makedirs(ply_out_dir, exist_ok=True)

    pc_data = np.concatenate(points)
    # Open3D expects colors as floats in the range [0, 1]
    pc_rgb = np.concatenate(points_rgb).astype(np.float64) / 255.0

    pcd = o3d.geometry.PointCloud()
    pcd.points = o3d.utility.Vector3dVector(pc_data)
    pcd.colors = o3d.utility.Vector3dVector(pc_rgb)
    o3d.io.write_point_cloud(os.path.join(ply_out_dir, "pointcloud.ply"), pcd)

asyncio.ensure_future(test_pointcloud())

Skeleton Data

Annotator Name: skeleton_data

The skeleton data annotator outputs pose information about the skeletons in the scene view.

Output Format

skeleton json file.

Data Details

This annotator returns its data as a single JSON-formatted string held in a dictionary under the key skeletonData (as in the example output below). Use json.loads(data["skeletonData"]) to extract the available attributes shown below:

| Parameter | Description |
|---|---|
| skeleton_parents | Parent joint index of each joint; -1 denotes the root |
| rest_local_rotations | Local rotation of each joint at rest |
| rest_local_translations | Local translation of each joint at rest |
| skel_name | Name of the skeleton |
| skel_path | Path of the skeleton |
| global_translations | Global translation of each joint |
| local_rotations | Local rotation of each joint |
| skeleton_joints | Name of each joint |
| translations_2d | Projected 2d points of each joint |
| in_view | If the skeleton is in view of the camera |
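To show how these attributes might be used together, here is a small sketch that parses a skeleton string and lists the joints whose projected 2d position falls inside the image. It assumes the string is a JSON-encoded list with one dictionary per skeleton, carrying the attributes in the table above. The helper name and the two-joint sample are hypothetical.

```python
import json

def joints_in_frame(skeleton_json: str, width: int, height: int) -> dict:
    """Map each skeleton name to the joints projected inside a width x height image."""
    visible = {}
    for skel in json.loads(skeleton_json):
        visible[skel["skel_name"]] = [
            joint
            for joint, (x, y) in zip(skel["skeleton_joints"], skel["translations_2d"])
            if 0 <= x < width and 0 <= y < height
        ]
    return visible

# Hypothetical two-joint skeleton; the second joint projects outside the image.
sample_json = json.dumps([{
    "skel_name": "Worker",
    "skeleton_parents": [-1, 0],
    "skeleton_joints": ["Root", "Root/Hip"],
    "translations_2d": [[513.9, 298.0], [1500.0, 543.6]],
    "in_view": True,
}])

visible = joints_in_frame(sample_json, width=1024, height=1024)
print(visible)
```

Parsing with json.loads handles JSON literals such as true and null, which a Python eval of the same string would not.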

Example

Below is an example script that attaches the skeleton_data annotator to a render product and prints the skeleton pose annotation for a captured frame.

import asyncio
import json

import omni.replicator.core as rep

# Define paths for the character
PERSON_SRC = 'omniverse://localhost/NVIDIA/Assets/Characters/Reallusion/Worker/Worker.usd'

async def test_skeleton_data():
    # Add Default Light
    distance_light = rep.create.light(rotation=(315,0,0), intensity=3000, light_type="distant")

    # Human Model
    person = rep.create.from_usd(PERSON_SRC, semantics=[('class', 'person')])

    # Area to scatter spheres in
    area = rep.create.cube(scale=2, position=(0.0, 0.0, 100.0), visible=False)

    # Create the camera and render product
    camera = rep.create.camera(position=(25, -421.0, 182.0), rotation=(77.0, 0.0, 3.5))
    render_product = rep.create.render_product(camera, (1024, 1024))

    def randomize_spheres():
        spheres = rep.create.sphere(scale=0.1, count=100)
        with spheres:
            rep.randomizer.scatter_3d(area)
        return spheres.node

    rep.randomizer.register(randomize_spheres)

    with rep.trigger.on_frame(interval=10, num_frames=5):
        rep.randomizer.randomize_spheres()

    # Attach annotator
    skeleton_anno = rep.annotators.get("skeleton_data")
    skeleton_anno.attach(render_product)

    await rep.orchestrator.step_async()

    data = skeleton_anno.get_data()
    print(data)
    # {
    #   'skeletonData': '[{
    #       "skeleton_parents": [-1, 0, ..., 78, 99],
    #       "rest_global_translations": [[0.0, 0.0, 0.0], [0.0, 2.82, 100.41], ..., [1.0, -0.0, 0.0, 0.0], [1.0, -0.0, 0.0, 0.0]],
    #       "rest_local_translations": [[0.0, 0.0, 0.0], [0.0, 2.82, 100.41], ..., [0.0, 0.0, 0.0], [-0.0, 12.92, 0.01]],
    #       "skel_name": "Worker",
    #       "skel_path": "/Replicator/Ref_Xform/Ref/ManRoot/Worker/Worker",
    #       "asset_path": "Bones/Worker.StandingDiscussion_LookingDown_M.usd",
    #       "animation_variant": null,
    #       "global_translations": [[-0.00, 0.031, 0.0], [0.07, 1.17, 96.29], ..., [-17.57, 3.97, 141.99], [-21.63, 2.61, 129.80]],
    #       "local_rotations": [[1.0, 0.0, 0.0, 0.0], [0.74, 0.67, 0.0, -0.0], ..., [1.0, -0.0, 0.087, -0.0], [1.0, 0.0, -0.08694560302873298, 0.0]],
    #       "skeleton_joints": ["RL_BoneRoot", "RL_BoneRoot/Hip", ..., "RL_BoneRoot/Hip/Waist/Spine01/Spine02/R_Clavicle/R_Upperarm/R_UpperarmTwist01", "RL_BoneRoot/Hip/Waist/Spine01/Spine02/R_Clavicle/R_Upperarm/R_UpperarmTwist01/R_UpperarmTwist02"],
    #       "translations_2d": [[513.94, 298.00], [514.41, 543.59], ..., [466.33, 669.42], [455.12, 635.45]],
    #       "in_view": true
    #   }]'
    # }

    # Parse the JSON string to extract the data (the key matches the printed output above)
    skeleton_data = json.loads(data["skeletonData"])

asyncio.ensure_future(test_skeleton_data())

Motion Vectors

Annotator Name: motion_vectors

Outputs a 2D array of motion vectors representing the relative motion of a pixel in the camera’s viewport between frames.

Output Format

The motion_vectors annotator produces a 2D array of type np.float32 with 4 channels.

Data Details

Each value is a normalized direction in 3D space.

Note

The values represent motion relative to camera space.

Cross Correspondence

Annotator Name: cross_correspondence

The cross correspondence annotator outputs a 2D array representing the camera optical flow map of the camera’s viewport against a reference viewport.

Output Format

The cross_correspondence annotator produces a 2D array of type np.float32 with 4 channels.

Data Details

The components of each entry in the 2D array represent four different values encoded as floating point values:

- x: dx - difference to the x value of the corresponding pixel in the reference viewport. This value is normalized to [-1.0, 1.0].
- y: dy - difference to the y value of the corresponding pixel in the reference viewport. This value is normalized to [-1.0, 1.0].
- z: occlusion mask - boolean signifying that the pixel is occluded or truncated in one of the cross referenced viewports. The floating point value represents a boolean (1.0 = True, 0.0 = False).
- w: geometric occlusion calculated - boolean signifying that the pixel can or cannot be tested as having occluded geometry (e.g. no occlusion testing is performed on missed rays). The floating point value represents a boolean (1.0 = True, 0.0 = False).

Other Notes

Invalid data is returned as [-1.0, -1.0, -1.0, -1.0].
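The channel layout described above can be unpacked as in the following sketch, which separates the offsets from the two boolean channels and masks out invalid entries. It operates on a synthetic 1x3 array. The function name and the returned dictionary keys are hypothetical, and the normalized dx and dy values are passed through unchanged because their exact scaling to pixels is not specified here.

```python
import numpy as np

def decode_cross_correspondence(cc: np.ndarray) -> dict:
    """Split an (H, W, 4) cross correspondence array into named components."""
    invalid = np.all(cc == -1.0, axis=-1)          # [-1, -1, -1, -1] marks invalid data
    return {
        "valid": ~invalid,
        "dx": np.where(invalid, np.nan, cc[..., 0]),
        "dy": np.where(invalid, np.nan, cc[..., 1]),
        "occluded": (cc[..., 2] == 1.0) & ~invalid,
        "occlusion_tested": (cc[..., 3] == 1.0) & ~invalid,
    }

# Synthetic data: a visible pixel, an occluded pixel and an invalid pixel.
fake_cc = np.array(
    [[[0.25, -0.5, 0.0, 1.0], [0.125, 0.125, 1.0, 1.0], [-1.0, -1.0, -1.0, -1.0]]],
    dtype=np.float32,
)

decoded = decode_cross_correspondence(fake_cc)
print(decoded["valid"], decoded["occluded"])
```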

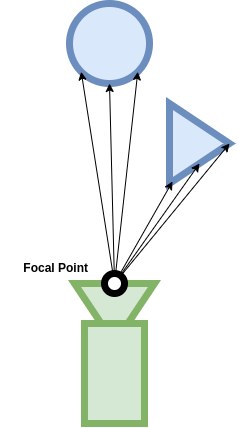

Camera Parameters

Annotator Name: camera_params

The Camera Parameters annotator returns the camera details for the camera corresponding to the render product to which the annotator is attached.

Data Details

| Parameter | Description |
|---|---|
| cameraFocalLength | Camera focal length |
| cameraFocusDistance | Camera focus distance |
| cameraFStop | Camera fStop value |
| cameraAperture | Camera horizontal and vertical aperture |
| cameraApertureOffset | Camera horizontal and vertical aperture offset |
| renderProductResolution | RenderProduct resolution |
| cameraModel | Camera model name |
| cameraViewTransform | World to camera transformation matrix |
| cameraProjection | Camera projection matrix |
| cameraFisheyeNominalWidth | Camera fisheye nominal width |
| cameraFisheyeNominalHeight | Camera fisheye nominal height |
| cameraFisheyeOpticalCentre | Camera fisheye optical centre |
| cameraFisheyeMaxFOV | Camera fisheye maximum field of view |
| cameraFisheyePolynomial | Camera fisheye polynomial |
| cameraNearFar | Camera near/far clipping range |
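A few of these parameters can be combined into more familiar quantities. The sketch below derives the horizontal field of view from the focal length and horizontal aperture using the standard pinhole relation, and reshapes a flat 16-element matrix into 4x4 form. It assumes a pinhole camera model, that focal length and aperture share the same unit, and that matrices are stored row by row. The helper names and the input values are illustrative only.

```python
import math
import numpy as np

def horizontal_fov_degrees(focal_length: float, horizontal_aperture: float) -> float:
    """Horizontal field of view of a pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(horizontal_aperture / (2.0 * focal_length)))

def as_matrix(flat) -> np.ndarray:
    """Reshape a flat 16-element array into a 4x4 matrix (assumed row-major)."""
    return np.asarray(flat, dtype=np.float64).reshape(4, 4)

# Illustrative values shaped like the annotator's fields.
params = {
    "cameraFocalLength": 24.0,
    "cameraAperture": np.array([20.955, 15.2908], dtype=np.float32),
    "cameraViewTransform": [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, -100, 0, 0, 1],
}

fov = horizontal_fov_degrees(params["cameraFocalLength"], float(params["cameraAperture"][0]))
view = as_matrix(params["cameraViewTransform"])
print(round(fov, 1), view[3, :3])
```

For fisheye camera models the pinhole relation does not apply, and the fisheye-specific parameters would be needed instead.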

Example

import asyncio

import omni.replicator.core as rep

async def test_camera_params():
    camera_1 = rep.create.camera()
    camera_2 = rep.create.camera(
        position=(100, 0, 0),
        projection_type="fisheye_polynomial"
    )

    # One render product per camera
    render_product_1 = rep.create.render_product(camera_1, (1024, 1024))
    render_product_2 = rep.create.render_product(camera_2, (1024, 1024))

    anno_1 = rep.annotators.get("CameraParams")
    anno_1.attach(render_product_1)
    anno_2 = rep.annotators.get("CameraParams")
    anno_2.attach(render_product_2)

    await rep.orchestrator.step_async()

    print(anno_1.get_data())
    # {'cameraAperture': array([20.955 , 15.2908], dtype=float32),
    #  'cameraApertureOffset': array([0., 0.], dtype=float32),
    #  'cameraFisheyeMaxFOV': 0.0,
    #  'cameraFisheyeNominalHeight': 0,
    #  'cameraFisheyeNominalWidth': 0,
    #  'cameraFisheyeOpticalCentre': array([0., 0.], dtype=float32),
    #  'cameraFisheyePolynomial': array([0., 0., 0., 0., 0.], dtype=float32),
    #  'cameraFocalLength': 24.0,
    #  'cameraFocusDistance': 400.0,
    #  'cameraFStop': 0.0,
    #  'cameraModel': 'pinhole',
    #  'cameraNearFar': array([1., 1000000.], dtype=float32),
    #  'cameraProjection': array([ 2.291, 0.   , 0.   , 0.   ,
    #                              0.   , 2.291, 0.   , 0.   ,
    #                              0.   , 0.   , 0.   , -1.  ,
    #                              0.   , 0.   , 1.   , 0.   ]),
    #  'cameraViewTransform': array([1., 0., 0., 0., 0., 1., 0., 0., 0., 0., 1., 0., 0., 0., 0., 1.]),
    #  'metersPerSceneUnit': 0.009999999776482582,
    #  'renderProductResolution': array([1024, 1024], dtype=int32)
    # }

    print(anno_2.get_data())
    # {
    #  'cameraAperture': array([20.955 , 15.291], dtype=float32),
    #  'cameraApertureOffset': array([0., 0.], dtype=float32),
    #  'cameraFisheyeMaxFOV': 200.0,
    #  'cameraFisheyeNominalHeight': 1216,
    #  'cameraFisheyeNominalWidth': 1936,
    #  'cameraFisheyeOpticalCentre': array([970.9424, 600.375 ], dtype=float32),
    #  'cameraFisheyePolynomial': array([0. , 0.002, 0. , 0. , 0. ], dtype=float32),
    #  'cameraFocalLength': 24.0,
    #  'cameraFocusDistance': 400.0,
    #  'cameraFStop': 0.0,
    #  'cameraModel': 'fisheyePolynomial',
    #  'cameraNearFar': array([1., 1000000.], dtype=float32),
    #  'cameraProjection': array([ 2.291, 0.   , 0.   , 0.   ,
    #                              0.   , 2.291, 0.   , 0.   ,
    #                              0.   , 0.   , 0.   , -1.  ,
    #                              0.   , 0.   , 1.   , 0.   ]),
    #  'cameraViewTransform': array([1., 0., 0., 0., 0., 1., 0., 0., 0., 0., 1., 0., -100., 0., 0., 1.]),
    #  'metersPerSceneUnit': 0.009999999776482582,
    #  'renderProductResolution': array([1024, 1024], dtype=int32)
    # }

asyncio.ensure_future(test_camera_params())