Containerizing a Kit Application

Overview

This document outlines how to containerize and locally test a Kit application in preparation for deploying it to an Omniverse Application Streaming instance or any suitable Kubernetes cluster.

Setting Up the Development Environment

Before getting started, make sure your development environment meets the requirements for the steps that follow.

- A Linux-based PC or VM with sudo rights. A VM is highly recommended, because you can easily rebuild it as necessary. Suggested specification:

  - 12 vCPUs
  - 32GB of RAM
  - 230GB storage
  - Ubuntu 22.04 (or equivalent)

- On the Linux VM/PC you will need the following:

  - ~30GB free disk space, depending on the required dependencies for your application.
  - cURL, which can be installed with: sudo apt install curl
  - Docker (see the Docker installation section below)
  - nvidia-container-toolkit (see the installation section below)
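If you want a quick way to compare a machine against these requirements, a small preflight script can help. The following is a minimal sketch, assuming a GNU/Linux host (it reads /proc/meminfo and uses GNU df); the function names and thresholds are illustrative and are not part of any NVIDIA tooling.

```bash
#!/usr/bin/env bash
# Hypothetical preflight check for the host requirements listed above.
# Thresholds mirror the suggested sizing; adjust them for your application.
MIN_VCPUS=12
MIN_RAM_GIB=32
MIN_FREE_DISK_GIB=30

# Succeeds when integer $1 is greater than or equal to integer $2.
meets_min() {
  [ "$1" -ge "$2" ]
}

# Total RAM in GiB, rounded up. MemTotal is reported in kB and is slightly
# below the installed amount because the kernel reserves some memory.
ram_gib() {
  awk '/^MemTotal:/ { print int(($2 + 1048575) / 1048576) }' /proc/meminfo
}

# Available space in GiB on the filesystem that holds path $1 (GNU df).
free_disk_gib() {
  df -BG --output=avail "$1" | tail -n 1 | tr -dc '0-9'
}

# Prints one line per requirement; returns non-zero if anything is low or missing.
check_host() {
  local path="${1:-$HOME}" status=0 cpus ram disk cmd
  cpus=$(nproc)
  ram=$(ram_gib)
  disk=$(free_disk_gib "$path")

  if meets_min "$cpus" "$MIN_VCPUS"; then echo "OK    vCPUs: $cpus"
  else echo "LOW   vCPUs: $cpus (suggested $MIN_VCPUS)"; status=1; fi

  if meets_min "$ram" "$MIN_RAM_GIB"; then echo "OK    RAM: ${ram}GiB"
  else echo "LOW   RAM: ${ram}GiB (suggested $MIN_RAM_GIB)"; status=1; fi

  if meets_min "$disk" "$MIN_FREE_DISK_GIB"; then echo "OK    free disk at $path: ${disk}GiB"
  else echo "LOW   free disk at $path: ${disk}GiB (suggested $MIN_FREE_DISK_GIB)"; status=1; fi

  # Docker and nvidia-ctk are installed in the sections that follow.
  for cmd in curl docker nvidia-ctk; do
    if command -v "$cmd" >/dev/null 2>&1; then echo "OK    $cmd found"
    else echo "MISS  $cmd not found"; status=1; fi
  done
  return "$status"
}
```

Source the file (for example, source preflight.sh) and run check_host /opt to check the filesystem that will hold your build directory.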

Docker installation

Docker can be installed by following the instructions on the Docker website (https://docs.docker.com/engine/install/ubuntu/). On Ubuntu, Docker CE can also be set up with Docker's official convenience script (https://docs.docker.com/engine/install/ubuntu/#install-using-the-convenience-script). Copy the entire command below, from curl to docker, and paste it into your SSH window:

$ curl -fsSL https://get.docker.com | sh && sudo systemctl --now enable docker

This may take a moment to complete.

Verify that Docker is working by running the hello-world image:

$ sudo docker run hello-world

Additionally, install the Docker nvidia-container-toolkit using these steps:

$ curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
  && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
    sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
    sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

$ sudo sed -i -e '/experimental/ s/^#//g' /etc/apt/sources.list.d/nvidia-container-toolkit.list

$ sudo apt-get update

$ sudo apt-get install -y nvidia-container-toolkit

Configure Docker to use the NVIDIA runtime:

$ sudo nvidia-ctk runtime configure --runtime=docker

$ sudo systemctl restart docker
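Once Docker has restarted, it can be worth confirming that the NVIDIA runtime is registered and that a container can actually see the GPUs. This is a sketch with hypothetical function names, assuming the ubuntu image can be pulled from Docker Hub; the nvidia-smi smoke test mirrors the sample workload in NVIDIA's Container Toolkit documentation. Until you complete the non-root setup below, run the equivalent docker commands with sudo.

```bash
#!/usr/bin/env bash
# Sketch: confirm that Docker picked up the NVIDIA runtime after the restart.

# Succeeds if the Docker daemon lists a runtime named "nvidia".
# `docker info --format '{{json .Runtimes}}'` prints the daemon's runtimes as JSON.
has_nvidia_runtime() {
  docker info --format '{{json .Runtimes}}' 2>/dev/null | grep -q '"nvidia"'
}

# Runs nvidia-smi inside a throwaway Ubuntu container; it should list your GPUs.
gpu_smoke_test() {
  docker run --rm --runtime=nvidia --gpus all ubuntu nvidia-smi
}

verify_nvidia_docker() {
  if ! has_nvidia_runtime; then
    echo "nvidia runtime not registered; re-run 'nvidia-ctk runtime configure --runtime=docker' and restart docker" >&2
    return 1
  fi
  gpu_smoke_test
}
```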

To run Docker without sudo, follow the steps in Docker's post-installation guide (https://docs.docker.com/engine/install/linux-postinstall/#manage-docker-as-a-non-root-user):

$ sudo groupadd docker

$ sudo usermod -aG docker $USER

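NOTE: The group named docker may already exist, in which case groupadd reports an error that you can ignore. Log out and log back in so that your new group membership takes effect.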
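Group changes only apply to new login sessions, so a check like the following can tell you whether the docker group is merely assigned or already active in your current shell. This is a sketch with hypothetical helper names, assuming GNU id: with a user argument, id looks up the group database; without one, it reports the groups of the current process.

```bash
#!/usr/bin/env bash
# Sketch: succeeds if group $2 appears in the group list of user $1.
user_in_group() {
  id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qx "$2"
}

# Reports whether Docker can be used without sudo in the current session.
check_docker_group() {
  if ! user_in_group "$USER" docker; then
    echo "$USER is not in the docker group yet; run: sudo usermod -aG docker \$USER" >&2
    return 1
  fi
  # Without a user argument, id lists the groups of this shell, which only
  # include docker after a new login or after running: newgrp docker
  if ! id -nG | tr ' ' '\n' | grep -qx docker; then
    echo "log out and back in (or run: newgrp docker) to activate the docker group" >&2
    return 1
  fi
  echo "docker group active; try: docker run hello-world"
}
```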

To start the build process, create a separate directory for the build environment. In this example, the directory is named dockerfile:

$ sudo mkdir /opt/dockerfile

$ sudo chmod 777 -R /opt/dockerfile

Install the nvidia-container-toolkit

Follow the standard installation instructions to install Docker and the nvidia-container-toolkit on the system you are using to create the my_app container image. Enabling non-sudo support for Docker, as described in the official Docker post-install guide, eases development and is assumed by the commands below. Otherwise, you will need to prefix docker commands with sudo, which may have other implications for file and folder permissions.

Note

It is important to make sure you configure the NVIDIA container runtime with Docker by following the Configure Docker section of the instructions.

Resources

You will need the following resources:

- A previously packaged application in the form of a zip file. We use my_app.zip as the example below, but any properly built Kit application should work.

- Any additional resources or dependencies you wish to containerize.

Basic Steps

Perform a clean release build of your application. Filter out any files which should not be released.

Create a fat application package from your application.

Create a container from the fat application package.

Note

For in-depth information on how to develop, build, and package a Kit application refer to the kit-app-template documentation.

Create a Clean Release Build

We want to ensure that we are starting from a clean foundation.

# cd into the directory where your source is located

$ cd /home/horde/src/my_app

# clean the build directory

$ ./build.sh -c

# build a release version

$ ./build.sh -r

...

BUILD (RELEASE) SUCCEEDED (Took XX.YY seconds)

# confirm that you see the above message when the build ends.
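Instead of scanning the output by eye, you can let a small wrapper check both the exit code and the success line. This is a sketch, assuming build.sh exits non-zero on failure and prints the BUILD (RELEASE) SUCCEEDED line shown above; build_release is a hypothetical helper, not part of the repo tooling.

```bash
#!/usr/bin/env bash
# Sketch: run a clean release build and fail loudly if it did not succeed.
build_release() {
  local src_dir="$1" log rc
  log=$(mktemp)
  # Run clean + release in a subshell so the caller's directory is unchanged;
  # tee keeps the output visible while also saving it for the check below.
  (cd "$src_dir" && ./build.sh -c && ./build.sh -r) 2>&1 | tee "$log"
  rc=${PIPESTATUS[0]}
  if [ "$rc" -ne 0 ]; then
    echo "build failed with exit code $rc; log kept at $log" >&2
    return "$rc"
  fi
  if ! grep -q 'BUILD (RELEASE) SUCCEEDED' "$log"; then
    echo "success message not found; log kept at $log" >&2
    return 1
  fi
  rm -f "$log"
}
```

For example: build_release /home/horde/src/my_app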

Create a Fat Application Package

To create a container image of a Kit application, we must ensure that all of its required dependencies are available. Creating a fat application package uses the NVIDIA-provided repo tool to do exactly that: it identifies all dependencies required by the Kit application and packages them, in a structured way, into a self-contained .zip file.

$ ./repo.sh package

Packaging app (fat package)...

...

Package Created: /home/horde/src/my_app/_build/packages/kit-app-template-fat@2023.2.1+my_app.0.92902f4b.local.linux-x86_64.release.zip (Took 226.32 seconds)

# ensure that you see something similar to the above message when the packaging ends

This creates a much larger .zip file (1.8GB or more) of your application in the _build/packages directory.
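Because the Dockerfile copies the whole package directory and then unzips whatever matches *.release.zip, stale packages from earlier runs can bloat the image or break the unzip step. A minimal sketch for checking this, assuming the default _build/packages location (the helper names are hypothetical):

```bash
#!/usr/bin/env bash
# Sketch: locate the fat package and make sure only one is present.
PACKAGE_DIR="${PACKAGE_DIR:-_build/packages}"

# Prints the most recently modified *.release.zip in directory $1.
latest_package() {
  # ls -t sorts by modification time, newest first.
  ls -1t "${1:-$PACKAGE_DIR}"/*.release.zip 2>/dev/null | head -n 1
}

# Succeeds only if exactly one *.release.zip exists directly in directory $1.
single_package() {
  local count
  count=$(find "${1:-$PACKAGE_DIR}" -maxdepth 1 -type f -name '*.release.zip' | wc -l)
  if [ "$count" -ne 1 ]; then
    echo "expected 1 release package, found $count; remove stale packages first" >&2
    return 1
  fi
}
```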

Creating the container

To create a container from a Kit application package, you need the following:

- Installed versions of Docker and the nvidia-container-toolkit (make sure you follow the Configure Docker section of the instructions).

- A base container image to build on top of, containing the appropriate version of Kit (105.1.2 or later).

- A Dockerfile describing how to build the container; one is included in the my_app repo.

- Any other resources intended to be installed with the rest of the image.

Normally, you’d access data from an external data source, such as a Nucleus server or a cloud provider’s blob storage, and specify a path or URL when instantiating the Kit application instance. If you need to bundle resources into the image instead, here is an example of adding them to a “data” folder for your app:

# cd into the root of the my_app repo directory

$ cd /home/horde/src/my_app

# Create a "data" directory in the root of the repo directory

$ mkdir data

# Copy the unzipped asset folder into the data directory. In this instance, asset.zip was unzipped into the my-asset folder in the user's Downloads folder.

$ cp -rp ~/Downloads/my-asset data/
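If your assets are still zipped, you can extract them straight into the data directory instead of copying an unzipped folder. This sketch accepts either a folder or a .zip archive; stage_assets is a hypothetical helper, and the .zip case requires the unzip utility.

```bash
#!/usr/bin/env bash
# Sketch: stage an asset folder or .zip archive into the data directory ($2, default "data").
stage_assets() {
  local src="$1" data_dir="${2:-data}"
  mkdir -p "$data_dir" || return 1
  case "$src" in
    # Extract the archive's contents into the data directory.
    *.zip) unzip -q "$src" -d "$data_dir" ;;
    # Copy the folder recursively, preserving timestamps and modes.
    *)     cp -rp "$src" "$data_dir"/ ;;
  esac
}
```

For example: stage_assets ~/Downloads/asset.zip data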

Review the Dockerfile

A Dockerfile describes how a Docker container image should be assembled. The default file name is “Dockerfile”, and you can find this one in the repo.

# Use the Kit 105.1.2 streaming base image available publicly via NVIDIA NGC

FROM nvcr.io/nvidia/omniverse/ov-kit-appstreaming:105.1.2-135279.16b4b239

# Cleanup embedded kit-sdk-launcher package as my_app is a full package with kit-sdk

RUN rm -rf /opt/nvidia/omniverse/kit-sdk-launcher

# Copy the application package from the _build/packages directory into the container's /app directory.

COPY --chown=ubuntu:ubuntu _build/packages/ /app/

# Unzip the application package into the container's /app directory and then delete the application package

WORKDIR /app

RUN PACKAGE_FILE=$(find . -type f -name "*.release.zip") && unzip $PACKAGE_FILE -d . && rm $PACKAGE_FILE

# Pull in any additional required dependencies

RUN /app/pull_kit_sdk.sh

# Copy in the resource data if available. This is commented out and should only be enabled if you have additional data.

#COPY --chown=ubuntu:ubuntu data /app/data

# Copy the ovc Kit file from the repo's source/apps directory into the container image.

COPY --chown=ubuntu:ubuntu source/apps/my_app.ovc.kit /app/apps/my_app.ovc.kit

# Copy the startup.sh script from the repo's source/scripts directory.

# This is what will be called when the container image is started.

COPY --chown=ubuntu:ubuntu source/scripts/startup.sh /startup.sh

RUN chmod +x /startup.sh

# This specifies the container's default entrypoint that will be called by "docker run".

ENTRYPOINT [ "/startup.sh" ]

The two most important lines are the FROM instruction and the commented-out COPY instruction for the data directory.

The FROM instruction specifies the base image used to build the my_app image. It should point to the publicly available version of Kit in NVIDIA NGC that matches your application (currently 105.1.2) and that is suitable for streaming.

The COPY instruction specifies that the contents of the data directory, at the root of the cloned repository, should be copied into the container's /app/data directory. If you have copied your assets into the data directory, as described in a previous step, uncomment this line.
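Uncommenting that line can also be scripted, which helps if you rebuild often. This is a sketch that assumes the COPY line appears exactly as in the Dockerfile above and only enables it when the data directory is non-empty; enable_data_copy is a hypothetical helper, and sed -i is the GNU/BusyBox in-place option.

```bash
#!/usr/bin/env bash
# Sketch: uncomment the data COPY line in the Dockerfile ($1) if the data directory ($2) has content.
enable_data_copy() {
  local dockerfile="${1:-Dockerfile}" data_dir="${2:-data}"
  local line='COPY --chown=ubuntu:ubuntu data /app/data'
  # ls -A prints nothing for an empty or missing directory.
  if [ -z "$(ls -A "$data_dir" 2>/dev/null)" ]; then
    echo "no assets in $data_dir; leaving the COPY line commented out" >&2
    return 1
  fi
  sed -i "s|^#${line}\$|${line}|" "$dockerfile"
  # Confirm the uncommented line is now present.
  grep -qxF "$line" "$dockerfile"
}
```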

Once the Dockerfile has been reviewed and updated, you are ready to build it.

Find the Correct Base Image to Use

The base image must match the version of Kit that your application and any required extensions were built with. It must also include functionality for efficient deployment to a Kubernetes cluster (e.g., support for cluster-wide shader caching).

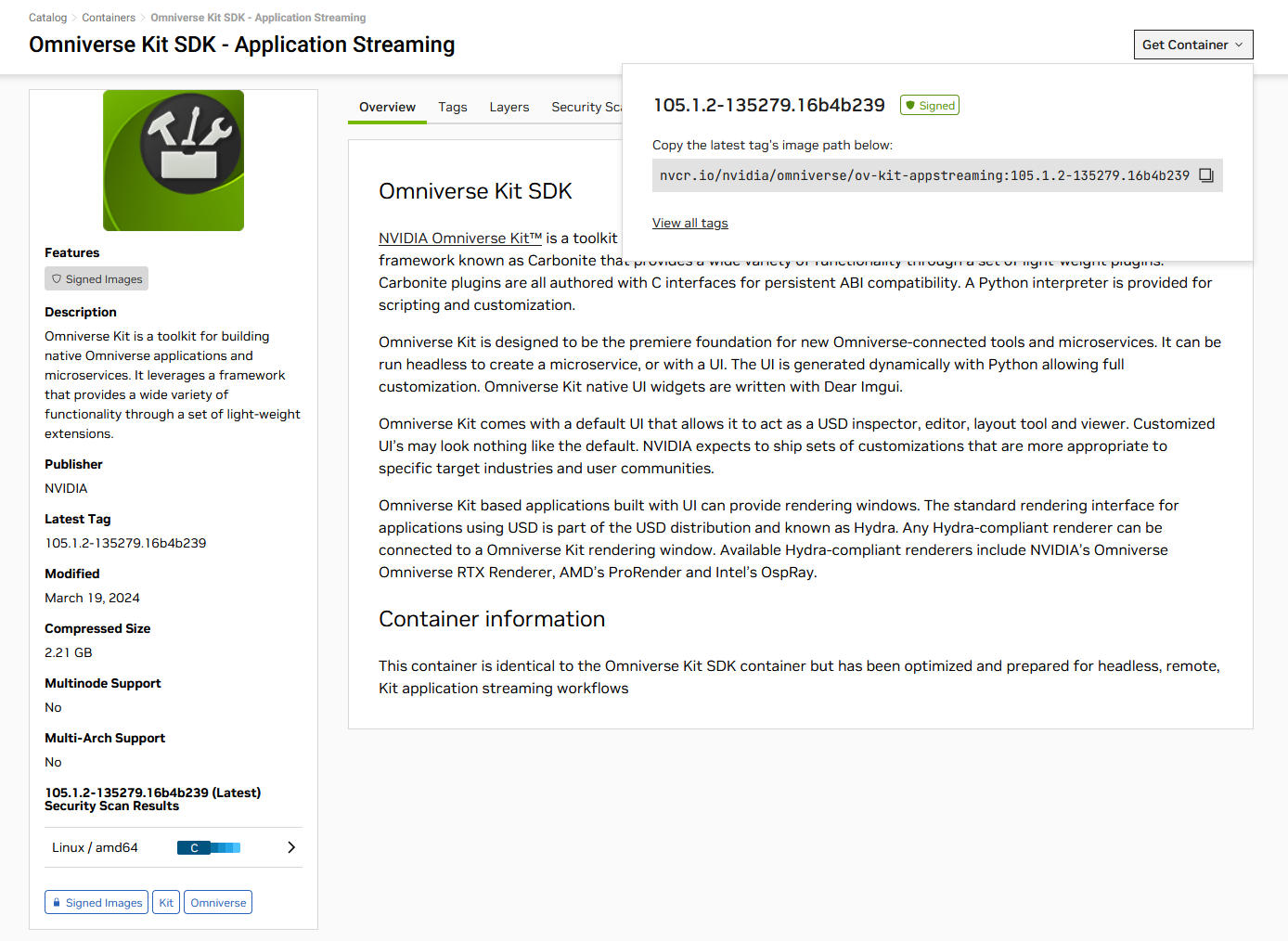

The screenshot above shows the base-image URL obtained from NGC that points to the ov-kit-appstreaming image used for containerizing.

Note

At the time of creating this document, the above Docker file is pointing to the correct base image in NVIDIA’s NGC for Kit 105.1.2.

In NGC, search the containers for “Omniverse” and “Kit”, then select the Omniverse Kit SDK - Application Streaming tile. Use the Get Container menu (top-right) to get the latest image, or select the View all tags link to see all available versions.
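To check which Kit version the Dockerfile targets, and to pull the base image ahead of the build, you can read the image reference from the FROM line. A sketch, assuming the tag keeps the <kit-version>-<build> shape seen in 105.1.2-135279.16b4b239; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: read the base image from the Dockerfile so it can be checked and pre-pulled.

# Prints the image named by the first FROM instruction, skipping flags such as --platform=...
base_image() {
  awk 'toupper($1) == "FROM" {
         for (i = 2; i <= NF; i++) if ($i !~ /^--/) { print $i; exit }
       }' "${1:-Dockerfile}"
}

# Prints the part of the tag before the first hyphen (assumed to be the Kit version).
kit_version_of() {
  local tag="${1##*:}"
  printf '%s\n' "${tag%%-*}"
}

# Pulls the base image referenced by the Dockerfile ($1, default "Dockerfile").
pull_base_image() {
  local image
  image=$(base_image "${1:-Dockerfile}")
  if [ -z "$image" ]; then
    echo "no FROM instruction found" >&2
    return 1
  fi
  docker pull "$image"
}
```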

Build the Container

Building the container image is straightforward once you have a properly configured Dockerfile:

# Make sure we are in the my_app root directory

$ cd /home/horde/src/my_app

# Run the docker command to build the container image of the my_app Kit application, using the tag "my_app", version 0.2.0

$ docker build . -t my_app:0.2.0

...

=> => writing image sha256:9f3738248a15b521fa48ba4b83c9f12e31542b5352ac0638bb523628c25a 0.0s

=> => naming to docker.io/library/my_app:0.2.0

# confirm the above line

# To view the local docker images, including the new one you just created

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

my_app 0.2.0 9f37382d4574 2 minutes ago 12.4GB

This will build the container image locally, stored in Docker’s internal directory structure.
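Keeping the image name and version in one place helps the build, run, and cleanup commands all refer to the same name:tag; an untagged reference such as my_app resolves to my_app:latest. A minimal sketch with hypothetical variable and function names:

```bash
#!/usr/bin/env bash
# Sketch: single source of truth for the image reference.
APP_IMAGE="${APP_IMAGE:-my_app}"
APP_VERSION="${APP_VERSION:-0.2.0}"

# Prints the full image reference, e.g. my_app:0.2.0.
image_ref() {
  printf '%s:%s\n' "$APP_IMAGE" "$APP_VERSION"
}

# Builds the image from context directory $1 (default ".") and prints its size in bytes.
build_image() {
  local context="${1:-.}" ref
  ref=$(image_ref)
  docker build -t "$ref" "$context" || return 1
  # Confirms the tag now exists locally.
  docker image inspect --format '{{.Size}}' "$ref"
}
```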


Running the Container

Now that we have created the container image, we want to ensure that it runs. We will follow a similar process for testing the container as we did when running the newly built usd-viewer Kit application: we run the container, which loads the application and starts the streaming process, and then connect to it directly via a client-side app as we did previously, with no changes required.

# Run the container, using the nvidia runtime and all available GPUs, and host networking

$ docker run --runtime=nvidia --gpus all --net=host my_app:0.2.0

This starts the my_app container. Other than the log output in the shell, you will not see anything.
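Running the container in the background under a fixed name makes it easier to follow the logs and to stop it later. A sketch, where the container name my_app_test and the helper names are hypothetical; the flags are the same as in the command above plus docker run's standard -d and --name options.

```bash
#!/usr/bin/env bash
# Sketch: run the image detached under a fixed container name.
APP_IMAGE_REF="${APP_IMAGE_REF:-my_app:0.2.0}"
APP_CONTAINER="${APP_CONTAINER:-my_app_test}"

run_app() {
  # -d detaches; --name replaces the random name Docker would otherwise assign.
  docker run -d --name "$APP_CONTAINER" \
    --runtime=nvidia --gpus all --net=host "$APP_IMAGE_REF"
}

# Streams the container's output (Ctrl+C stops following, not the container).
follow_logs() {
  docker logs -f "$APP_CONTAINER"
}

# Forcibly stops and removes the named container.
remove_app() {
  docker rm -f "$APP_CONTAINER"
}
```

Because the name is fixed, run remove_app before starting another test run; otherwise docker run reports that the name is already in use.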

Note

If your application contains rendering scenes, it can take several minutes to compile all of the shaders required for the rendered materials.

The Omniverse Application Streaming API platform uses a shared shader cache across all of the pods in the cluster to avoid repeating shader compilation. This cache is not available when running the container image locally.

Connecting to the Running Container via a local viewer app in Local Mode

Let’s begin with the assumption that you are either testing with one of NVIDIA’s sample client apps, or you have created your own.

Note

Chrome is the preferred browser.

# cd into the directory where you extracted the usd-viewer-streaming-sample

$ cd /home/horde/src/my-viewer-streaming-app

# Install all of the dependencies

$ npm install

# Run the sample web application

$ npm run dev

VITE v5.0.10 ready in 456 ms

➜ Local: http://localhost:5173/

➜ Network: use --host to expose

➜ press h + enter to show help
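If my_app and the client run on a headless Linux VM and you browse from another machine, the Vite output above suggests --host to expose the dev server on the network. A sketch, assuming the sample's dev script runs Vite so that arguments after -- are forwarded to it; serve_client and CLIENT_DIR are hypothetical names.

```bash
#!/usr/bin/env bash
# Sketch: install and start the sample client, optionally exposing it on the network.
CLIENT_DIR="${CLIENT_DIR:-/home/horde/src/my-viewer-streaming-app}"

# The ( ) body runs in a subshell, so the cd does not affect the caller.
serve_client() (
  local expose="${1:-no}"
  cd "$CLIENT_DIR" || exit 1
  npm install || exit 1
  if [ "$expose" = "expose" ]; then
    # Everything after -- is passed through npm to the dev script.
    npm run dev -- --host
  else
    npm run dev
  fi
)
```

For example: serve_client expose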

Fixing a Black Screen

This can occur if the shaders are still being compiled when the web client connects to the streaming Kit application. Depending on the number of unique shaders, compiling all of them can take several minutes. Closing and reopening the browser tab is usually the most effective way to resolve this issue. When the application is deployed to an Omniverse Application Streaming API instance, this is mitigated by a shared shader cache across all of the pods in the cluster.

Shut It Down

You can shut down the client application in the usual manner.

To stop the container:

# List all of the running containers

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

67742e0ce56e my_app:0.2.0 "/startup.sh" 17 seconds ago Up 12 seconds gifted_hellman

b72d62d539d3 my_other_app:1.3.7 "python /app/linux_e…" 3 weeks ago Up 2 days

# Find the my_app:0.2.0 container's name in the NAMES column and kill it

$ docker kill gifted_hellman
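Instead of looking up the generated container name, you can stop every container started from the image. A sketch using the ancestor filter of docker ps (stop_app_containers is a hypothetical helper); it uses docker stop, which gives the application a chance to shut down cleanly, rather than docker kill.

```bash
#!/usr/bin/env bash
# Sketch: stop all running containers created from image $1 (default my_app:0.2.0).
stop_app_containers() {
  local ref="${1:-my_app:0.2.0}" id
  # --filter ancestor=<image> matches containers created from that image;
  # -q prints only the container IDs.
  for id in $(docker ps -q --filter "ancestor=$ref"); do
    # docker stop sends SIGTERM and, after a grace period, SIGKILL;
    # docker kill sends SIGKILL immediately.
    docker stop "$id"
  done
}
```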

Congratulations, you have a streamable Kit application ready for deployment to any Kubernetes cluster, such as an Omniverse Application Streaming instance.

Next Steps

There are a few additional steps required to deploy your locally built my_app container image to an Omniverse Application Streaming instance. These are outside the scope of this document and will likely require assistance from members of your cloud infrastructure team.